(Press-News.org)

Scientists hope that quantum computing will help them study complex phenomena that have so far proven challenging for current computers – including the properties of new and exotic materials. But despite the hype surrounding each new claim of “quantum supremacy”, there is no easy way to say when quantum computers and quantum algorithms have a clear and practical advantage over classical ones.

A large collaboration led by Giuseppe Carleo, a physicist at the Swiss Federal Institute of Technology in Lausanne (EPFL) and a member of the National Centre of Competence in Research NCCR MARVEL, has now introduced a method to compare the performance of different algorithms, both classical and quantum, when simulating complex phenomena in condensed matter physics. The new benchmark, called V-score, is described in an article just published in Science.

The study focussed on the “many-body problem”, one of the major challenges in physics. In theory, the laws of quantum mechanics give you everything you need to exactly predict the behavior of particles. But when you have several particles interacting with each other, as happens in complex molecules and crystals, this calculation becomes impossible.

A good example of a many-body problem is when physicists and chemists need to calculate the ground state of a material, that is, its lowest possible energy level, which tells you whether the material can exist in a stable state or how many different phases it can have. In most cases, scientists must make do with approximating the ground state rather than calculating an exact solution. There are several techniques and algorithms to approximate the ground state for different classes of materials, or for properties of interest, and their accuracy can vary a lot.

Enter quantum computing. In principle, a quantum system – like the qubits that make up a quantum computer – is the best way to approximate another quantum system, such as the electronic structure of a material, and several algorithms for calculating ground states on quantum computers have been devised. But do they really offer an advantage over classical algorithms, such as Monte Carlo simulations? Answering that question requires a rigorous and clear metric to compare the performance of existing – or future – algorithms. “Quantum computing is where theoretical computer science and physics meet”, says Carleo. “But computer scientists and physicists may have different views on what constitutes a hard problem”. When companies like Google claim to have achieved “quantum supremacy”, an emphatic term that indicates a clear advantage in using quantum computers over classical ones, they often base their claims on solving computational riddles that have no real practical application. “In this article we identify problems that really matter to physics and try to measure how complex they actually are”.

Carleo and his colleagues, who come from several institutions in Europe, America and Asia, started by assembling a library of ground state simulations of different physical systems, based on different techniques. They focused on “model Hamiltonians”, which are functions that simplify interesting phenomena found in groups of materials, rather than on specific chemical compounds. A typical example is the Hubbard model, which is used to simulate superconductivity.

For some of those functions, an exact solution exists – either from very expensive calculations or from experiments – that can be compared with the results obtained from various algorithms to estimate their error. Based on that, the research team came up with a relatively simple error metric, which they called V-score (or “variational accuracy”), based on a combination of the ground state energy calculated by the algorithm for a given system and the energy fluctuation. “To have a good description of a material’s ground state we need an estimate of its energy, which must be low enough to allow the system to be stable, and we need to know that energy fluctuations are close to zero, meaning that if you measure the energy of the system several times the result remains the same”, explains Carleo. “We found that the combined value of energy level and energy variation in a many-body calculation is highly correlated with the error. We validated the metric on materials for which we have the exact solution, and then we used it to estimate the error for the other cases, typically very large systems”.
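To make the two ingredients of the metric concrete, here is a minimal sketch in Python. It computes the energy and the energy fluctuation (variance) of a few trial states for a toy two-level Hamiltonian and combines them into a single dimensionless number. The article does not spell out the formula, so the combination used here, `n_dof * variance / (energy - e_ref)**2`, is an assumption, as are the toy Hamiltonian, the reference energy `e_ref` and every function name. It should not be read as the authors' implementation.

```python
import math

# Toy two-level Hamiltonian (illustrative only, not taken from the article).
# Its exact eigenvalues are -1 and +1, so the exact ground-state energy is -1.
H = [[0.0, -1.0],
     [-1.0, 0.0]]


def apply(matrix, vector):
    """Multiply a small dense matrix by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def energy_and_variance(matrix, state):
    """Return (<H>, <H^2> - <H>^2) for a normalized real state vector."""
    h_state = apply(matrix, state)
    mean = sum(s * h for s, h in zip(state, h_state))
    # For a symmetric H, <psi|H^2|psi> equals the squared norm of H|psi>.
    mean_sq = sum(h * h for h in h_state)
    # Clamp tiny negative values caused by floating-point rounding.
    return mean, max(mean_sq - mean * mean, 0.0)


def score(energy, variance, n_dof=1, e_ref=0.0):
    """Assumed score: n_dof * variance / (energy - e_ref)^2 (hypothetical form)."""
    return n_dof * variance / (energy - e_ref) ** 2


def trial_state(theta):
    """One-parameter family of normalized trial states."""
    return [math.cos(theta), math.sin(theta)]


for theta in (0.3, 0.6, math.pi / 4):
    e, var = energy_and_variance(H, trial_state(theta))
    print(f"theta={theta:.3f}  energy={e:.4f}  variance={var:.4f}  score={score(e, var):.4f}")
```

In this toy case the trial state at `theta = pi / 4` is the exact ground state. Its energy is the lowest of the three and its variance vanishes, so the score drops to zero, while the poorer trial states have both higher energy and larger fluctuations.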

This way, the group could identify the hardest problems in computational materials science, which are those where no existing method has a low V-score. The results show that 1D materials (the class that includes carbon nanotubes) are easily solved by existing classical methods. “This does not mean they are simple problems per se, but that we have good techniques to tackle them”, Carleo points out.

Many 2D or 3D materials are also relatively easy. On the other hand, 3D crystal structures made up of many atoms and with “frustrated” geometries – complex arrangements of atoms caused by magnetic interactions – have high V-scores. The Hubbard model itself, when used on 2D systems where there is strong competition between the electrons’ interaction and their mobility, proves to be hard for existing methods. These are systems where future quantum computing algorithms may really make a difference.

Carleo explains that the point of the study is not so much to rank existing techniques, but to have a reliable way to assess the advantage brought by new ones that will be introduced. “Our database and codes are fully open access, and we’d like it to become a dynamic resource that is updated whenever a new technique is introduced in the literature”.

END

If you’ve ever struggled to reduce your carb intake, ancient DNA might be to blame.

It has long been known that humans carry multiple copies of a gene that allows us to begin breaking down complex carbohydrate starch in the mouth, providing the first step in metabolizing starchy foods like bread and pasta. However, it has been notoriously difficult for researchers to determine how and when the number of these genes expanded. Now a new study led by The University of Buffalo (UB) and The Jackson Laboratory (JAX) showcases how early duplications of this ...

The biosynthetic pathway of specific steroidal compounds in nightshade plants (such as potatoes, tomatoes and eggplants) starts with cholesterol. Several studies have investigated the enzymes involved in the formation of steroidal glycoalkaloids. Although the genes responsible for producing the scaffolds of steroidal specialized metabolites are known, successfully reconstituting of these compounds in other plants has not yet been achieved. The project group ‘Specialized Steroid Metabolism in Plants’ in the Department of Natural Product Biosynthesis, led by Prashant Sonawane, who is now Assistant ...

A major new study reveals that carbon dioxide (CO2) emissions from forest fires have surged by 60% globally since 2001, and almost tripled in some of the most climate-sensitive northern boreal forests.

The study, led by the University of East Anglia (UEA) and published today in Science, grouped areas of the world into ‘pyromes’ - regions where forest fire patterns are affected by similar environmental, human, and climatic controls - revealing the key factors driving recent increases in forest fire activity.

It is one of the first studies to look globally at the differences between forest ...

A new study shows that an artificial intelligence (AI) tool can help people with different views find common ground by more effectively summarizing the collective opinion of the group than humans. By producing statements that convey the majority opinion, while incorporating the minority’s perspective, the AI produced outputs that participants preferred—and that they rated as more informative, clear, and unbiased, compared to those written by human mediators. Human society is enriched by a plurality of viewpoints, but agreement is a prerequisite for people to act collectively. ...

The health of American democracy is facing challenges, with experts pointing to recent democratic backsliding, deepening partisan divisions, and growing anti-democratic attitudes and rhetoric. In this issue of Science, Research Articles, a Policy Forum, a Science News feature, and a related Editorial highlight how the tools of science and technology are being used to address this growing concern and how the upcoming U.S. presidential election could impact U.S. science.

In one research study in this special issue, Jonathan Chu and colleagues sought to understand whether understandings ...

As climate change promotes fire-favorable weather, climate-driven wildfires in extratropical forests have overtaken tropical forests as the leading source of global fire emissions, researchers report. The findings raise urgent concerns about the future of forest carbon sinks under climate change. Fire has long played a role in shaping Earth's forests and regulating carbon storage in ecosystems. However, anthropogenic climate change has intensified fire-prone weather, leading to an increase in burned areas and carbon emissions, particularly in forested regions. These fires not only reduce forests' ability to absorb carbon but also disrupt ecosystems, harm biodiversity, and pose significant ...

Studies of chemicals in our blood typically capture only a small and unknown fraction of the entire chemical universe. Now, a new approach aims to change that. In a study involving blood samples of pregnant women collected between 2006 and 2008, researchers report having quantified many complex mixtures of chemicals that may pose neurotoxic risks, even when the individual chemicals were present at seemingly harmless levels. “The quantification of 294 to 473 chemicals in plasma is a major improvement compared with the usual targeted analysis focusing on only few selective analytes,” wrote the authors. ...

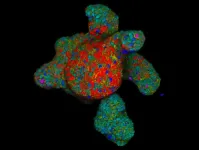

A multi-institutional group of researchers led by the Hubrecht Institute and Roche’s Institute of Human Biology has developed strategies to identify regulators of intestinal hormone secretion. In response to incoming food, these hormones are secreted by rare hormone producing cells in the gut and play key roles in managing digestion and appetite. The team has developed new tools to identify potential ‘nutrient sensors’ on these hormone producing cells and study their function. This could result in new strategies to interfere with the release of these hormones and provide avenues for the treatment of a variety of metabolic or gut motility disorders. The work will be presented ...

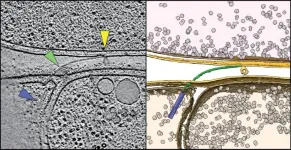

Countless bacteria call the vastness of the oceans home, and they all face the same problem: the nutrients they need to grow and multiply are scarce and unevenly distributed in the waters around them. In some spots they are present in abundance, but in many places they are sorely lacking. This has led a few bacteria to develop into efficient hunters to tap into new sources of sustenance in the form of other microorganisms.

Although this strategy is very successful, researchers have so far found only a few predatory bacterial species. One is the soil bacterium Myxococcus xanthus; ...

"In our everyday lives, we are exposed to a wide variety of chemicals that are distributed and accumulate in our bodies. These are highly complex mixtures that can affect bodily functions and our health," says Prof Beate Escher, Head of the UFZ Department of Cell Toxicology and Professor at the University of Tübingen. "It is known from environmental and water studies that the effects of chemicals add up when they occur in low concentrations in complex mixtures. Whether this is also the case in the human body has not yet been sufficiently investigated - this is precisely where our study comes in."

The extensive research work was based on over 600 blood samples from ...