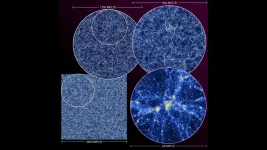

A team of physicists and computer scientists from the U.S. Department of Energy's (DOE) Argonne National Laboratory performed one of the five largest cosmological simulations ever. Data from the simulation will inform sky maps to aid leading large-scale cosmological experiments.

The simulation, called the Last Journey, follows the distribution of mass across the universe over time -- in other words, how gravity causes a mysterious invisible substance called "dark matter" to clump together to form larger-scale structures called halos, within which galaxies form and evolve.

The scientists performed the simulation on Argonne's supercomputer Mira. The same team of scientists ran a previous cosmological simulation called the Outer Rim in 2013, just days after Mira turned on. After running simulations on the machine throughout its seven-year lifetime, the team marked Mira's retirement with the Last Journey simulation.

The Last Journey demonstrates how far observational and computational technology has come in just seven years, and it will contribute data and insight to experiments such as the Stage-4 ground-based cosmic microwave background experiment (CMB-S4), the Legacy Survey of Space and Time (carried out by the Rubin Observatory in Chile), the Dark Energy Spectroscopic Instrument and two NASA missions, the Roman Space Telescope and SPHEREx.

"We worked with a tremendous volume of the universe, and we were interested in large-scale structures, like regions of thousands or millions of galaxies, but we also considered dynamics at smaller scales," said Katrin Heitmann, deputy division director for Argonne's High Energy Physics (HEP) division.

The code that constructed the cosmos

The six-month span for the Last Journey simulation and major analysis tasks presented unique challenges for software development and workflow. The team adapted some of the same code used for the 2013 Outer Rim simulation with some significant updates to make efficient use of Mira, an IBM Blue Gene/Q system that was housed at the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility.

Specifically, the scientists used the Hardware/Hybrid Accelerated Cosmology Code (HACC) and its analysis framework, CosmoTools, to extract relevant information incrementally while the simulation was running.

"Running the full machine is challenging because reading the massive amount of data produced by the simulation is computationally expensive, so you have to do a lot of analysis on the fly," said Heitmann. "That's daunting, because if you make a mistake with analysis settings, you don't have time to redo it."

The team took an integrated approach to carrying out the workflow during the simulation. HACC would run the simulation forward in time, determining the effect of gravity on matter during large portions of the history of the universe. Once HACC determined the positions of trillions of computational particles representing the overall distribution of matter, CosmoTools would step in to record relevant information -- such as finding the billions of halos that host galaxies -- to use for analysis during post-processing.
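The alternating pattern described above can be sketched in a few lines of Python. This is only a toy illustration under stated assumptions, not HACC or CosmoTools code: the function names `step_gravity`, `analyze` and `run`, the one-dimensional softened gravity, the simple semi-implicit Euler update and every parameter value are hypothetical choices made for the example.

```python
import random


def step_gravity(pos, vel, dt, softening=0.05):
    """Advance all particles one time step under mutual gravity.

    Toy model (assumption): 1D positions, unit masses, G = 1, direct
    pairwise sum with a softening length to avoid division by zero.
    """
    n = len(pos)
    acc = [0.0] * n
    for i in range(n):
        for j in range(n):
            if i != j:
                dx = pos[j] - pos[i]
                acc[i] += dx / (dx * dx + softening ** 2) ** 1.5
    # Semi-implicit Euler: update velocities first, then positions.
    new_vel = [v + a * dt for v, a in zip(vel, acc)]
    new_pos = [x + v * dt for x, v in zip(pos, new_vel)]
    return new_pos, new_vel


def analyze(pos):
    """Reduce the full particle state to a small summary worth storing."""
    mean = sum(pos) / len(pos)
    spread = (sum((x - mean) ** 2 for x in pos) / len(pos)) ** 0.5
    return {"mean": mean, "spread": spread}


def run(n_particles=50, n_steps=20, analyze_every=5, dt=0.01, seed=0):
    """Interleave 'move particles' and 'do analysis' in a single loop."""
    rng = random.Random(seed)
    pos = [rng.uniform(-1.0, 1.0) for _ in range(n_particles)]
    vel = [0.0] * n_particles
    summaries = []
    for step in range(1, n_steps + 1):
        pos, vel = step_gravity(pos, vel, dt)   # move particles
        if step % analyze_every == 0:           # do analysis on the fly
            record = analyze(pos)
            record["step"] = step
            summaries.append(record)
    return summaries


summaries = run()
for record in summaries:
    print(record["step"], round(record["spread"], 4))
```

Only the small summaries survive the loop; the full particle state is overwritten at every step, which is why the analysis settings have to be right the first time.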

"When we know where the particles are at a certain point in time, we characterize the structures that have formed by using CosmoTools and store a subset of data to make further use down the line," said Adrian Pope, physicist and core HACC and CosmoTools developer in Argonne's Computational Science (CPS) division. "If we find a dense clump of particles, that indicates the location of a dark matter halo, and galaxies can form inside these dark matter halos."

The scientists repeated this interwoven process -- where HACC moves particles and CosmoTools analyzes and records specific data -- until the end of the simulation. The team then used features of CosmoTools to determine which clumps of particles were likely to host galaxies. For reference, each galaxy in the simulation is represented by roughly 100 to 1,000 particles.
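One common way to turn "a dense clump of particles" into a list of candidate halos is a friends-of-friends grouping, in which any two particles closer than a chosen linking length are chained into the same group. The article does not say which algorithm CosmoTools uses, so the sketch below is an assumption for illustration only; the names `friends_of_friends`, `linking_length` and `min_members`, and the tiny hand-made point set, are hypothetical.

```python
from collections import defaultdict


def friends_of_friends(points, linking_length, min_members=3):
    """Group points whose pairwise distance is within linking_length.

    Returns lists of point indices, largest group first. Groups with
    fewer than min_members points are discarded as too sparse.
    """
    parent = list(range(len(points)))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    def union(i, j):
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[rj] = ri

    limit_sq = linking_length ** 2
    # Brute-force pair search: fine for a toy, far too slow for real data.
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            dist_sq = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
            if dist_sq <= limit_sq:
                union(i, j)

    groups = defaultdict(list)
    for i in range(len(points)):
        groups[find(i)].append(i)
    kept = [sorted(g) for g in groups.values() if len(g) >= min_members]
    return sorted(kept, key=len, reverse=True)


points = [
    (0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (0.1, 0.1),  # clump of four
    (5.0, 5.0), (5.1, 5.0), (5.0, 5.1),              # clump of three
    (10.0, 0.0), (20.0, 20.0),                       # isolated particles
]
halos = friends_of_friends(points, linking_length=0.2, min_members=3)
print(halos)
```

In this toy the `min_members` cut plays the role of a minimum particle count per structure; a production code would use a spatial tree or grid instead of the all-pairs loop.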

"We would move particles, do analysis, move particles, do analysis," said Pope. "At the end, we would go back through the subsets of data that we had carefully chosen to store and run additional analysis to gain more insight into the dynamics of structure formation, such as which halos merged together and which ended up orbiting each other."

Using the optimized workflow with HACC and CosmoTools, the team ran the simulation in half the expected time.

Community contribution

The Last Journey simulation will provide data that other major cosmological experiments can use when comparing observations or drawing conclusions about a host of topics. These insights could shed light on topics ranging from cosmological mysteries, such as the role of dark matter and dark energy in the evolution of the universe, to the astrophysics of galaxy formation across the universe.

"This huge data set they are building will feed into many different efforts," said Katherine Riley, director of science at the ALCF. "In the end, that's our primary mission -- to help high-impact science get done. When you're able to not only do something cool, but to feed an entire community, that's a huge contribution that will have an impact for many years."

The team's simulation will address numerous fundamental questions in cosmology. It is essential for refining existing models and developing new ones, which will affect both ongoing and upcoming cosmological surveys.

"We are not trying to match any specific structures in the actual universe," said Pope. "Rather, we are making statistically equivalent structures, meaning that if we looked through our data, we could find locations where galaxies the size of the Milky Way would live. But we can also use a simulated universe as a comparison tool to find tensions between our current theoretical understanding of cosmology and what we've observed."

Looking to exascale

"Thinking back to when we ran the Outer Rim simulation, you can really see how far these scientific applications have come," said Heitmann, who performed Outer Rim in 2013 with the HACC team and Salman Habib, CPS division director and Argonne Distinguished Fellow. "It was awesome to run something substantially bigger and more complex that will bring so much to the community."

As Argonne works towards the arrival of Aurora, the ALCF's upcoming exascale supercomputer, the scientists are preparing for even more extensive cosmological simulations. Exascale computing systems will be able to perform a billion billion calculations per second -- 50 times faster than many of the most powerful supercomputers operating today.
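As a quick check of the figures quoted above, the arithmetic works out as follows. This is plain arithmetic on the article's own numbers, assuming "a billion" means 10^9; it is not an additional measurement.

$$
10^{9} \times 10^{9} = 10^{18}\ \text{calculations per second}, \qquad \frac{10^{18}}{50} = 2 \times 10^{16}\ \text{calculations per second}
$$

On those numbers, the systems used for comparison would perform about 20 quadrillion calculations per second.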

"We've learned and adapted a lot during the lifespan of Mira, and this is an interesting opportunity to look back and look forward at the same time," said Pope. "When preparing for simulations on exascale machines and a new decade of progress, we are refining our code and analysis tools, and we get to ask ourselves what we weren't doing because of the limitations we have had until now."

The Last Journey was a gravity-only simulation, meaning it did not consider interactions such as gas dynamics and the physics of star formation. Gravity is the major player in large-scale cosmology, but the scientists hope to incorporate other physics in future simulations to observe the differences they make in how matter moves and distributes itself through the universe over time.

"More and more, we find tightly coupled relationships in the physical world, and to simulate these interactions, scientists have to develop creative workflows for processing and analyzing," said Riley. "With these iterations, you're able to arrive at your answers -- and your breakthroughs -- even faster."

INFORMATION:

A paper on the simulation, titled "The Last Journey. I. An extreme-scale simulation on the Mira supercomputer," was published on Jan. 27 in the Astrophysical Journal Supplement Series. The scientists are currently preparing follow-up papers to generate detailed synthetic sky catalogs.

The work was a multidisciplinary collaboration between high energy physicists and computer scientists from across Argonne and researchers from Los Alamos National Laboratory.

Funding for the simulation is provided by DOE's Office of Science.

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy's (DOE's) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation's first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America's scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy's Office of Science.

The U.S. Department of Energy's Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.