(Press-News.org) MADISON — People who were more skeptical of human-caused climate change or the Black Lives Matter movement and who conversed with a popular AI chatbot were disappointed with the experience, but they left the conversation more supportive of the scientific consensus on climate change or of BLM. This is according to researchers studying how these chatbots handle interactions with people from different cultural backgrounds.

Savvy humans can adjust to their conversation partners’ political leanings and cultural expectations to make sure they’re understood, but more and more often, humans find themselves in conversation with computer programs, called large language models, meant to mimic the way people communicate.

Researchers at the University of Wisconsin–Madison studying AI wanted to understand how one complex large language model, GPT-3, would perform across a culturally diverse group of users in complex discussions. The model is a precursor to one that powers the high-profile ChatGPT. The researchers recruited more than 3,000 people in late 2021 and early 2022 to have real-time conversations with GPT-3 about climate change and BLM.

“The fundamental goal of an interaction like this between two people (or agents) is to increase understanding of each other's perspective,” says Kaiping Chen, a professor of life sciences communication who studies how people discuss science and deliberate on related political issues — often through digital technology. “A good large language model would probably make users feel the same kind of understanding.”

Chen and Yixuan “Sharon” Li, a UW–Madison professor of computer science who studies the safety and reliability of AI systems, along with their students Anqi Shao and Jirayu Burapacheep (now a graduate student at Stanford University), published their results this month in the journal Scientific Reports.

Study participants were instructed to strike up a conversation with GPT-3 through a chat setup Burapacheep designed. The participants were told to chat with GPT-3 about climate change or BLM, but were otherwise left to approach the experience as they wished. The average conversation went back and forth about eight turns.

Most of the participants came away from their chat with similar levels of user satisfaction.

“We asked them a bunch of questions — Do you like it? Would you recommend it? — about the user experience,” Chen says. “Across gender, race, ethnicity, there's not much difference in their evaluations. Where we saw big differences was across opinions on contentious issues and different levels of education.”

The roughly 25% of participants who reported the lowest levels of agreement with scientific consensus on climate change or least agreement with BLM were, compared to the other 75% of chatters, far more dissatisfied with their GPT-3 interactions. They gave the bot scores half a point or more lower on a 5-point scale.

Despite the lower scores, the chat shifted their thinking on the hot topics. The hundreds of people who were least supportive of the facts of climate change and its human-driven causes moved a combined 6% closer to the supportive end of the scale.

“They showed in their post-chat surveys that they have larger positive attitude changes after their conversation with GPT-3,” says Chen. “I won't say they began to entirely acknowledge human-caused climate change or suddenly they support Black Lives Matter, but when we repeated our survey questions about those topics after their very short conversations, there was a significant change: more positive attitudes toward the majority opinions on climate change or BLM.”

GPT-3 responded in different styles on the two topics, offering more justification when the subject was human-caused climate change.

“That was interesting. People who expressed some disagreement with climate change, GPT-3 was likely to tell them they were wrong and offer evidence to support that,” Chen says. “GPT-3’s response to people who said they didn’t quite support BLM was more like, ‘I do not think it would be a good idea to talk about this. As much as I do like to help you, this is a matter we truly disagree on.’”

That’s not a bad thing, Chen says. Equity and understanding come in different shapes to bridge different gaps. Ultimately, that’s her hope for the chatbot research. Next steps include explorations of finer-grained differences between chatbot users, but high-functioning dialogue between divided people is Chen’s goal.

“We don't always want to make the users happy. We wanted them to learn something, even though it might not change their attitudes,” Chen says. “What we can learn from a chatbot interaction about the importance of understanding perspectives, values, cultures, this is important to understanding how we can open dialogue between people — the kind of dialogues that are important to society.”

— Chris Barncard, barncard@wisc.edu

Chats with AI shift attitudes on climate change, Black Lives Matter

2024-01-25

ELSE PRESS RELEASES FROM THIS DATE:

PNNL Software Technology wins FLC Award

2024-01-25

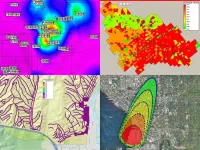

RICHLAND, Wash.—Visual Sample Plan (VSP), a free software tool developed at the Department of Energy's Pacific Northwest National Laboratory (PNNL) that boosts statistics-based planning, has been recognized with a 2024 Federal Laboratory Consortium (FLC) Award.

The FLC represents over 300 federal laboratories, agencies, and research centers. The annual FLC awards program recognizes agencies for their contributions to technology transfer, which turns innovative research into impactful products and services.

Judges bestowed ...

Programming light propagation creates highly efficient neural networks

2024-01-25

Current artificial intelligence models utilize billions of trainable parameters to achieve challenging tasks. However, this large number of parameters comes with a hefty cost. Training and deploying these huge models require immense memory space and computing capability that can only be provided by hangar-sized data centers in processes that consume energy equivalent to the electricity needs of midsized cities. The research community is presently making efforts to rethink both the related computing hardware and the machine learning algorithms to sustainably keep the development of artificial intelligence at its current pace.

Optical implementation ...

Advincula earns prestigious NAI fellow honor

2024-01-25

Rigoberto “Gobet” Advincula has been awarded one of the highest honors of his profession.

Advincula, the University of Tennessee-Oak Ridge National Laboratory Governor’s Chair of Advanced and Nanostructured Materials, has been elected National Academy of Inventors (NAI) Fellow.

Advincula is a leader in the polymer field with inventions and many publications in polymer nanocomposites, graphene nanomaterials, polymer layered films, and coatings. He has been granted 14 US patents and has 21 published filings related to graphene nanomaterials, solid-state device fabrication, smart coatings and films, ...

Sweat-analyzing temporary tattoo research funded in NSF grant to UMass Amherst researcher

2024-01-25

AMHERST – University of Massachusetts Amherst researchers have received an award to develop a new type of sweat monitor that can be applied to the skin just like a temporary tattoo and assess the molecules present, such as cortisol. The tattoos will ultimately give individuals better insight into their health and serve as a tool for researchers to discover new early indications of diseases.

“There are a lot of vital biomolecules that are present in sweat that we need to measure to really understand overall human performance and correlation to different ...

Simulations show how HIV sneaks into the nucleus of the cell

2024-01-25

Because viruses have to hijack someone else’s cell to replicate, they’ve gotten very good at it—inventing all sorts of tricks.

A new study from two University of Chicago scientists has revealed how HIV squirms its way into the nucleus as it invades a cell.

According to their models, the HIV capsid, which is cone-shaped, points its smaller end into the pores of the nucleus and then ratchets itself in. Once the pore is open enough, the capsid is elastic enough to squeeze through. Importantly, the scientists ...

White House rule dramatically deregulated wetlands, streams and drinking water

2024-01-25

The 1972 Clean Water Act protects the "waters of the United States" but does not precisely define which streams and wetlands this phrase covers, leaving it to presidential administrations, regulators, and courts to decide. As a result, the exact coverage of Clean Water Act rules is difficult to estimate.

New research led by a team at the University of California, Berkeley, used machine learning to more accurately predict which waterways are protected by the Act. The analysis found that a 2020 Trump administration rule removed Clean Water Act ...

How an ant invasion led to lions eating fewer zebra in a Kenyan ecosystem

2024-01-25

The invasion of non-native species can sometimes lead to large and unexpected ecosystem shifts, as Douglas Kamaru and colleagues demonstrate in a unique, careful study that traces the links between big-headed ants, acacia trees, elephants, lions, zebras, and buffalo at a Kenyan conservancy. The invasive big-headed ant species disrupted a mutualism between native ants and the region’s thorny acacia trees, in which the native ants protected the trees from grazers in exchange for a place to live. Through a combination of observations, experimental plots, and animal tracking at Ol Pejeta Conservancy, Kamaru et al. followed the ecosystem chain reaction prompted by this disruption. ...

Total organic carbon concentrations measured over Canadian oil sands reveal huge underestimate of emissions

2024-01-25

New measurements of total gaseous organic carbon concentrations in the air over the Athabasca oil sands in Canada suggest that traditional methods of estimating this pollution can severely underestimate emissions, according to an analysis by Megan He and colleagues. Using aircraft-based measurements, He et al. conclude that the total gaseous organic carbon emissions from oil sands operations exceed industry-reported values by 1900% to over 6300% across the studied facilities. “Measured facility-wide emissions represented approximately 1% of extracted petroleum, resulting in total organic ...

Machine learning model identifies waters protected under different interpretations of the U.S. Clean Water Act

2024-01-25

The U.S. Clean Water Act is a critically important part of federal water quality regulation, but the act does not define the exact waters that fall under its jurisdiction. Now, Simon Greenhill and colleagues have developed a machine learning model that helps to clarify which waters are protected from pollution under the United States’ Clean Water Act, and how recent rule changes affect protection. The model demonstrates that the waters protected under the act differ substantially depending on whether the act’s regulations follow a 2006 U.S. Supreme Court ruling or a 2020 White House rule. Under the 2006 Rapanos Supreme Court ruling, the model suggests that the Clean ...

Gamma ray observations of a microquasar demonstrate electron shock acceleration

2024-01-25

Observations of gamma rays, emitted by relativistic jets in a microquasar system, demonstrate the acceleration of electrons by a shock front, reports a new study. The microquasar SS 433 is a binary system made up of a compact object, probably a black hole, and a supergiant star. The black hole pulls material off the star and ejects plasma jets, which move at close to the speed of light. The High Energy Stereoscopic System (H.E.S.S.) is an array of five telescopes in Namibia that observe gamma rays. The H.E.S.S. ...