(Press-News.org) Before he joined the University of Texas at Arlington as an Assistant Professor in the Department of Computer Science and Engineering and founded the Robotic Vision Laboratory there, William Beksi interned at iRobot, the world's largest producer of consumer robots (mainly through its Roomba robotic vacuum).

To navigate built environments, robots must be able to sense and make decisions about how to interact with their locale. Researchers at the company were interested in using machine and deep learning to train their robots to learn about objects, but doing so requires a large dataset of images. While there are millions of photos and videos of rooms, none were shot from the vantage point of a robotic vacuum. Efforts to train using images with human-centric perspectives failed.

Beksi's research focuses on robotics, computer vision, and cyber-physical systems. "In particular, I'm interested in developing algorithms that enable machines to learn from their interactions with the physical world and autonomously acquire skills necessary to execute high-level tasks," he said.

Years later, now with a research group including six PhD computer science students, Beksi recalled the Roomba training problem and began exploring solutions. A manual approach, used by some, involves an expensive 360-degree camera to capture environments (including rented Airbnb houses) and custom software to stitch the images back into a whole. But Beksi believed the manual capture method would be too slow to succeed.

Instead, he looked to a form of deep learning known as generative adversarial networks, or GANs, where two neural networks contest with each other in a game until the 'generator' of new data can fool a 'discriminator.' Once trained, such a network would enable the creation of an infinite number of possible rooms or outdoor environments, with different kinds of chairs or tables or vehicles with slightly different forms, but still -- to a person and a robot -- identifiable objects with recognizable dimensions and characteristics.
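To make the "game" concrete: in the standard GAN formulation, the discriminator is trained to output high probabilities for real samples and low ones for generated samples, while the generator is trained to push the discriminator's output on its samples toward 1. The Python sketch below computes both losses from the discriminator's output probabilities. The function names are hypothetical, and it uses the common "non-saturating" generator loss, which may not be the exact variant used in Beksi's work.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator.

    d_real: probabilities the discriminator assigns to real samples.
    d_fake: probabilities it assigns to generated samples.
    The discriminator wants d_real -> 1 and d_fake -> 0.
    """
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants d_fake -> 1,
    i.e. it is rewarded when the discriminator is fooled."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# A discriminator that can no longer tell real from fake outputs 0.5 everywhere.
print(round(discriminator_loss([0.5, 0.5], [0.5, 0.5]), 4))  # 1.3863 (= 2 ln 2)
print(round(generator_loss([0.5, 0.5]), 4))                  # 0.6931 (= ln 2)
```

A confident discriminator (say 0.9 on real data and 0.1 on generated data) has a lower loss and leaves the generator with a higher one; training alternates between the two until the discriminator's outputs drift toward 0.5.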

"You can perturb these objects, move them into new positions, use different lights, color and texture, and then render them into a training image that could be used in a dataset," he explained. "This approach would potentially provide limitless data to train a robot on."
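The perturbation step Beksi describes resembles what is often called domain randomization. Below is a minimal sketch, assuming a point cloud stored as a list of (x, y, z) tuples with (r, g, b) colors in [0, 1]. The function name and the ranges for rotation, translation, lighting, and tint are hypothetical, and the rendering step is omitted.

```python
import math
import random

def randomize(points, colors, rng):
    """Return a randomly perturbed copy of a colored point cloud.

    points: list of (x, y, z) tuples; colors: list of (r, g, b) in [0, 1].
    The ranges below are illustrative assumptions, not tuned values.
    """
    angle = rng.uniform(0.0, 2.0 * math.pi)                   # random yaw about the vertical axis
    dx, dy = rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0)   # new position on the floor
    light = rng.uniform(0.6, 1.4)                             # global brightness factor
    tint = [rng.uniform(0.9, 1.1) for _ in range(3)]          # per-channel color shift
    c, s = math.cos(angle), math.sin(angle)

    # Rigid motion: rotate in the x-y plane, then translate; height (z) is unchanged.
    new_points = [(c * x - s * y + dx, s * x + c * y + dy, z) for x, y, z in points]
    # Relight and tint each color, clamping channels back into [0, 1].
    new_colors = [
        tuple(min(1.0, max(0.0, ch * light * t)) for ch, t in zip(rgb, tint))
        for rgb in colors
    ]
    return new_points, new_colors

rng = random.Random(0)
cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.5)]
colors = [(0.2, 0.4, 0.6), (0.9, 0.9, 0.9)]
variants = [randomize(cloud, colors, rng) for _ in range(3)]  # three perturbed copies
```

Because the motion is rigid, the object's shape and dimensions are preserved while its pose, lighting, and coloring vary, which matches the goal of producing objects that are still recognizable.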

"Manually designing these objects would take a huge amount of resources and hours of human labor while, if trained properly, the generative networks can make them in seconds," said Mohammad Samiul Arshad, a graduate student in Beksi's group involved in the research.

GENERATING OBJECTS FOR SYNTHETIC SCENES

After some initial attempts, Beksi realized his dream of creating photorealistic full scenes was presently out of reach. "We took a step back and looked at current research to determine how to start at a smaller scale - generating simple objects in environments."

Beksi and Arshad presented PCGAN, the first conditional generative adversarial network to generate dense colored point clouds in an unsupervised mode, at the International Conference on 3D Vision (3DV) in Nov. 2020. Their paper, "A Progressive Conditional Generative Adversarial Network for Generating Dense and Colored 3D Point Clouds," shows their network is capable of learning from a training set (derived from ShapeNetCore, a CAD model database) and mimicking a 3D data distribution to produce colored point clouds with fine details at multiple resolutions.
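A conditional GAN receives a class label alongside its random noise, so a single network can be asked for a chair or a motorcycle on demand. The article does not describe how PCGAN encodes its condition. The sketch below assumes the textbook approach of concatenating a noise vector with a one-hot label over the five object classes named in this article; `conditional_input` and `CLASSES` are hypothetical names.

```python
import random

# The five object classes used in the experiments described in this article.
CLASSES = ["chair", "table", "sofa", "airplane", "motorcycle"]

def conditional_input(class_name, noise_dim, rng):
    """Build a generator input: Gaussian noise followed by a one-hot class label.

    A generator fed this vector would output a colored point cloud in which
    every point carries six values: x, y, z and r, g, b.
    """
    if class_name not in CLASSES:
        raise ValueError(f"unknown class: {class_name}")
    noise = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    one_hot = [1.0 if c == class_name else 0.0 for c in CLASSES]
    return noise + one_hot

z = conditional_input("sofa", noise_dim=4, rng=random.Random(1))
print(len(z))   # 9
print(z[-5:])   # [0.0, 0.0, 1.0, 0.0, 0.0]
```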

"There was some work that could generate synthetic objects from these CAD model datasets," he said. "But no one could yet handle color."

In order to test their method on a diverse set of shapes, Beksi's team chose chairs, tables, sofas, airplanes, and motorcycles for their experiment. The tool gives the researchers access to a near-infinite number of possible versions of the objects the deep learning system generates.

"Our model first learns the basic structure of an object at low resolutions and gradually builds up towards high-level details," he explained. "The relationship between the object parts and their colors -- for example, the legs of the chair/table are the same color while seat/top are contrasting -- is also learned by the network. We're starting small, working with objects, and building to a hierarchy to do full synthetic scene generation that would be extremely useful for robotics."
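The coarse-to-fine idea can be illustrated with a toy upsampling loop in which each stage doubles the number of points and adds progressively smaller local detail. This is only a sketch of the general progressive strategy: the `upsample` helper, the doubling factor, and the 256-to-2,048 point schedule are hypothetical. In PCGAN a trained network, not random jitter, decides where new points (and their colors) go.

```python
import random

def upsample(points, factor, spread, rng):
    """Replace each point with `factor` children scattered around it.

    A stand-in for one "grow the resolution" stage; a trained network would
    predict the child offsets (and colors) instead of sampling them at random.
    """
    dense = []
    for x, y, z in points:
        for _ in range(factor):
            dense.append((x + rng.gauss(0.0, spread),
                          y + rng.gauss(0.0, spread),
                          z + rng.gauss(0.0, spread)))
    return dense

rng = random.Random(0)
cloud = [(rng.random(), rng.random(), rng.random()) for _ in range(256)]  # coarse shape
spread = 0.05
for stage in range(3):
    cloud = upsample(cloud, factor=2, spread=spread, rng=rng)
    spread /= 2  # later stages add smaller, more local detail
    print(stage, len(cloud))
# 0 512
# 1 1024
# 2 2048
```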

They generated 5,000 random samples for each class and evaluated both point cloud geometry and color using a variety of metrics common in the field. The results showed that PCGAN is capable of synthesizing high-quality point clouds for a disparate array of object classes.
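The article does not list the specific metrics. One widely used geometry measure for point clouds is the Chamfer distance, which averages, in both directions, the squared distance from each point to its nearest neighbour in the other cloud. A brute-force sketch (the function name is hypothetical):

```python
def chamfer_distance(a, b):
    """Symmetric Chamfer distance between two point sets (lists of tuples).

    For every point, find the squared distance to its nearest neighbour in the
    other set; average each direction and add the two averages.
    """
    def sq_dist(p, q):
        return sum((pi - qi) ** 2 for pi, qi in zip(p, q))

    a_to_b = sum(min(sq_dist(p, q) for q in b) for p in a) / len(a)
    b_to_a = sum(min(sq_dist(q, p) for p in a) for q in b) / len(b)
    return a_to_b + b_to_a

print(chamfer_distance([(0, 0, 0)], [(0, 0, 0)]))  # 0.0
print(chamfer_distance([(0, 0, 0)], [(1, 0, 0)]))  # 2.0
```

Because `sq_dist` iterates over however many coordinates each tuple has, the same function can be applied to 6-tuples (x, y, z, r, g, b) as a crude joint geometry-and-color comparison. That is a simplification for illustration, not necessarily how PCGAN's color evaluation worked.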

SIM2REAL

Another issue that Beksi is working on is known colloquially as 'sim2real.' "You have real training data, and synthetic training data, and there can be subtle differences in how an AI system or robot learns from them," he said. "'Sim2real' looks at how to quantify those differences and make simulations more realistic by capturing the physics of that scene -- friction, collisions, gravity -- and by using ray or photon tracing."
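Beksi does not say here how his group quantifies the sim-to-real gap. One common statistical tool for comparing two sets of samples, such as features extracted from real and synthetic data, is the maximum mean discrepancy (MMD). Below is a minimal sketch with a Gaussian kernel; the feature values and the bandwidth are made up for illustration.

```python
import math

def rbf(x, y, bandwidth):
    """Gaussian (RBF) kernel between two feature vectors."""
    sq = sum((xi - yi) ** 2 for xi, yi in zip(x, y))
    return math.exp(-sq / (2.0 * bandwidth ** 2))

def mmd2(xs, ys, bandwidth=1.0):
    """Biased estimate of the squared maximum mean discrepancy.

    Close to 0 when both samples look like draws from the same distribution;
    grows as the two distributions drift apart.
    """
    def mean_k(a, b):
        return sum(rbf(p, q, bandwidth) for p in a for q in b) / (len(a) * len(b))
    return mean_k(xs, xs) + mean_k(ys, ys) - 2.0 * mean_k(xs, ys)

real = [(0.0, 0.1), (0.2, 0.0), (0.1, 0.2)]       # hypothetical features of real scans
synthetic = [(0.9, 1.0), (1.1, 0.8), (1.0, 1.1)]  # hypothetical features of rendered data
print(mmd2(real, real))           # 0.0: no gap
print(mmd2(real, synthetic) > 0)  # True: a measurable gap
```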

The next step for Beksi's team is to deploy the software on a robot and see how it performs with respect to the sim-to-real domain gap.

The training of the PCGAN model was made possible by TACC's Maverick 2 deep learning resource, which Beksi and his students were able to access through the University of Texas Research Cyberinfrastructure (UTRC) program, which provides computing resources to researchers at any of the UT System's 14 institutions.

"If you want to increase resolution to include more points and more detail, that increase comes with an increase in computational cost," he noted. "We don't have those hardware resources in my lab, so it was essential to make use of TACC to do that."
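As a generic illustration of why more points cost more: any step that compares every point with every other point, such as a brute-force nearest-neighbour search, grows quadratically with the number of points, so doubling the resolution roughly quadruples the work. The article does not say how PCGAN's own cost scales, and the resolutions below are arbitrary.

```python
def pairwise_comparisons(n):
    """Number of distinct point pairs in a cloud of n points."""
    return n * (n - 1) // 2

for n in (2_048, 4_096, 8_192):
    print(n, pairwise_comparisons(n))
# 2048 2096128
# 4096 8386560
# 8192 33550336
```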

In addition to computation needs, Beksi required extensive storage for the research. "These datasets are huge, especially the 3D point clouds," he said. "We generate hundreds of megabytes of data per second; each point cloud is around 1 million points. You need an enormous amount of storage for that."
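Some rough arithmetic shows why storage adds up. Assuming each point stores three 32-bit floating-point coordinates and three 8-bit color channels (an assumption; the article does not give the storage format), a 1-million-point cloud takes about 15 MB uncompressed. A sustained rate of 200 MB/s, a hypothetical figure within the "hundreds of megabytes per second" Beksi mentions, fills 720 GB per hour.

```python
def cloud_bytes(num_points, coord_bytes=4, color_bytes=1):
    """Raw size of a colored point cloud: 3 coordinates + 3 color channels per point."""
    return num_points * (3 * coord_bytes + 3 * color_bytes)

print(cloud_bytes(1_000_000) / 1e6, "MB per cloud")  # 15.0 MB per cloud (float32 xyz, 8-bit rgb)
print(cloud_bytes(1_000_000, 4, 4) / 1e6, "MB")      # 24.0 MB with float32 colors

rate = 200e6  # hypothetical write rate in bytes per second
print(rate * 3600 / 1e9, "GB per hour")              # 720.0 GB per hour
```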

While Beksi says the field is still a long way from having truly robust robots that can operate autonomously for long periods of time, such robots would benefit multiple domains, including health care, manufacturing, and agriculture.

"The publication is just one small step toward the ultimate goal of generating synthetic scenes of indoor environments for advancing robotic perception capabilities," he said.

INFORMATION:

Following a series of studies on termite mound physiology and morphogenesis over the past decade, researchers at the Harvard John A. Paulson School of Engineering and Applied Sciences have now developed a mathematical model to help explain how termites construct their intricate mounds.

The research is published in the Proceedings of the National Academy of Sciences.

"Termite mounds are amongst the greatest examples of animal architecture on our planet," said L. Mahadevan, the Lola England de Valpine Professor of Applied Mathematics, of Organismic and Evolutionary Biology, and ...

Computer simulations hold tremendous promise to accelerate the molecular engineering of green energy technologies, such as new systems for electrical energy storage and solar energy usage, as well as carbon dioxide capture from the environment. However, the predictive power of these simulations depends on having a means to confirm that they do indeed describe the real world.

Such confirmation is no simple task. Many assumptions enter the setup of these simulations. As a result, the simulations must be carefully checked by using an appropriate "validation protocol" involving experimental measurements.

"We focused on a solid/liquid interface because interfaces are ubiquitous in materials, and those between oxides and water are key in many energy applications." -- Giulia Galli, theorist ...

New Rochelle, NY, January 19, 2021--Gene editing therapies, including CRISPR-Cas systems, offer the potential to correct mutations causing inherited retinal degenerations, a leading cause of blindness. Technological advances in gene editing, continuing safety concerns, and strategies to overcome these challenges are highlighted in the peer-reviewed journal Human Gene Therapy. Click here to read the full-text article free on the Human Gene Therapy website.

"Currently, the field is undergoing rapid development with a number of competing gene editing strategies, including allele-specific knock-down, base editing, prime editing, and RNA editing, are under investigation. Each offers a ...

ITHACA, N.Y. - When the semester shifted online amid the COVID-19 pandemic last spring, Cornell University instructor Mark Sarvary, and his teaching staff decided to encourage - but not require - students to switch on their cameras.

It didn't turn out as they'd hoped.

"Most of our students had their cameras off," said Sarvary, director of the Investigative Biology Teaching Laboratories in the College of Agriculture and Life Sciences (CALS).

"Students enjoy seeing each other when they work in groups. And instructors like seeing students, because it's a way to assess whether or not they understand the material," Sarvary said. "When we switched to online learning, that component got lost. We wanted to investigate the reasons ...

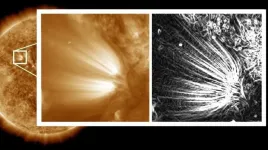

Scientists have combined NASA data and cutting-edge image processing to gain new insight into the solar structures that create the Sun's flow of high-speed solar wind, detailed in new research published today in The Astrophysical Journal. This first look at relatively small features, dubbed "plumelets," could help scientists understand how and why disturbances form in the solar wind.

The Sun's magnetic influence stretches billions of miles, far past the orbit of Pluto and the planets, defined by a driving force: the solar wind. This constant outflow of solar material carries the Sun's magnetic field out into space, where it shapes the environments around Earth, other worlds, and in the reaches of deep space. Changes in the solar wind ...

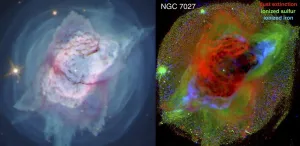

Images of two iconic planetary nebulae taken by the Hubble Space Telescope are revealing new information about how they develop their dramatic features. Researchers from Rochester Institute of Technology and Green Bank Observatory presented new findings about the Butterfly Nebula (NGC 6302) and the Jewel Bug Nebula (NGC 7027) at the 237th meeting of the American Astronomical Society on Friday, Jan. 15.

Hubble's Wide Field Camera 3 observed the nebulae in 2019 and early 2020 using its full, panchromatic capabilities, and the astronomers involved in the project ...

New high-resolution structures of the bacterial ribosome determined by researchers at the University of Illinois Chicago show that a single water molecule may be the cause -- and possible solution -- of antibiotic resistance.

The findings of the new UIC study are published in the journal Nature Chemical Biology.

Pathogenic germs become resistant to antibiotics when they develop the ability to defeat the drugs designed to kill them. Each year in the U.S., millions of people suffer from antibiotic-resistant infections, and thousands of people die as a result.

Developing new drugs is a key way the scientific community is trying to reduce the impact of antibiotic resistance.

"The first thing we need to do to make improved drugs is to better understand how antibiotics work and how 'bad ...

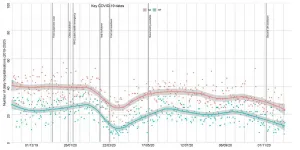

Data analysis is revealing a second sharp drop in the number of people admitted to hospital in England with acute heart failure or a heart attack.

The decline began in October as the numbers of COVID-19 infections began to surge ahead of the second lockdown, which came into force in early November.

The findings, from a research group led by the University of Leeds, have been revealed in a letter to the Journal of the American College of Cardiology.

The decline - 41 percent fewer people attending with heart failure and 34 percent with a heart attack compared to pre-pandemic levels ...

The human brain has about as many neurons as glial cells. These are divided into four major groups: the microglia, the astrocytes, the NG2 glial cells, and the oligodendrocytes. Oligodendrocytes function primarily as a type of cellular insulating tape: They form long tendrils, which consist largely of fat-like substances and do not conduct electricity. These wrap around the axons, which are the extensions through which the nerve cells send their electrical impulses. This prevents short circuits and accelerates signal forwarding.

Astrocytes, on the other hand, supply the nerve cells with energy: Through their appendages they come into contact with blood vessels and absorb glucose from these. ...

AMES, Iowa - Even if you weren't a physics major, you've probably heard something about the Higgs boson.

There was the title of a 1993 book by Nobel laureate Leon Lederman that dubbed the Higgs "The God Particle." There was the search for the Higgs particle that launched after 2009's first collisions inside the Large Hadron Collider in Europe. There was the 2013 announcement that Peter Higgs and Francois Englert won the Nobel Prize in Physics for independently theorizing in 1964 that a fundamental particle - the Higgs - is the source of mass in subatomic ...