(Press-News.org) COLUMBUS, Ohio – Systems controlled by next-generation computing algorithms could give rise to better and more efficient machine learning products, a new study suggests.

Using machine learning tools to create a digital twin, or virtual copy, of an electronic circuit that exhibits chaotic behavior, researchers were able to predict how the circuit would behave and use that information to control it.
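The predict-then-control idea can be illustrated with a small sketch. It is not the study's controller. The "circuit" here is a hypothetical logistic map with an additive control input `u`, the digital twin is a quadratic model fitted by ordinary least squares, and the controller simply picks the input that makes the twin predict the desired next state. The target is the map's unstable fixed point, a standard test case in chaos control. All names and constants are illustrative assumptions.

```python
import numpy as np

# Hypothetical stand-in for the chaotic circuit: a logistic map with an
# additive control input u. With u = 0 and R = 3.8 the map is chaotic.
R = 3.8

def plant(x, u):
    """One step of the (hypothetical) chaotic system being controlled."""
    return R * x * (1.0 - x) + u

def features(x, u):
    """Inputs to the digital twin: a constant, the state, its square, and the control."""
    return np.array([1.0, x, x * x, u])

# 1. Record how the real system responds to small random control inputs.
rng = np.random.default_rng(0)
x = 0.3
rows, targets = [], []
for _ in range(500):
    u = rng.uniform(-0.01, 0.01)
    x_next = plant(x, u)
    rows.append(features(x, u))
    targets.append(x_next)
    x = x_next

# 2. Fit the digital twin by linear least squares: x_next ~ coef . features.
coef, *_ = np.linalg.lstsq(np.array(rows), np.array(targets), rcond=None)

def twin_control(x, target):
    """Choose u so that the twin predicts the next state will equal the target."""
    c0, c1, c2, c3 = coef
    return (target - (c0 + c1 * x + c2 * x * x)) / c3

# 3. Use the twin to hold the real system at its unstable fixed point.
target = 1.0 - 1.0 / R
x = 0.3
for _ in range(50):
    u = twin_control(x, target)
    x = plant(x, u)

print(f"final state {x:.6f}, target {target:.6f}, last input {u:.2e}")
```

Because this twin matches the stand-in system exactly, one step is enough to reach the target, after which the required input stays near zero. A real circuit would add noise, model error and limits on how large `u` can be, none of which this sketch handles.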

Many everyday devices, like thermostats and cruise control, utilize linear controllers – which use simple rules to direct a system to a desired value. Thermostats, for example, employ such rules to determine how much to heat or cool a space based on the difference between the current and desired temperatures.
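A thermostat that follows the rule described above is a proportional controller, the simplest kind of linear controller: its output is a constant gain times the gap between the desired and current temperatures. The sketch below assumes a hypothetical room model and made-up constants (`K_P`, `LOSS`, `DT`) purely for illustration.

```python
# Hypothetical room model and gains, chosen only for illustration.
SETPOINT = 20.0   # desired temperature (C)
OUTSIDE = 10.0    # outdoor temperature (C)
K_P = 2.0         # proportional gain: heating power per degree of error
LOSS = 0.1        # how quickly the room leaks heat to the outside
DT = 0.1          # simulation time step

def proportional_control(current, setpoint, gain=K_P):
    """Linear control law: the output is proportional to the temperature error."""
    return gain * (setpoint - current)  # positive = heat, negative = cool

temp = 12.0
for _ in range(300):
    power = proportional_control(temp, SETPOINT)
    # The room warms with heater power and cools toward the outside temperature.
    temp += DT * (power - LOSS * (temp - OUTSIDE))

print(f"temperature after 300 steps: {temp:.3f} C")
```

The room settles near 19.52 C rather than exactly 20 C: with a purely proportional rule, some error has to remain to supply the heat the room keeps losing.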

Yet because these algorithms are so simple, they struggle to control systems that display complex behavior, such as chaos.

As a result, advanced devices like self-driving cars and aircraft often rely on machine learning-based controllers, which use intricate networks to learn the optimal control algorithm for operating the system. However, these algorithms have significant drawbacks, chief among them that they can be extremely challenging and computationally expensive to implement.

Now, having access to an efficient digital twin is likely to have a sweeping impact on how scientists develop future autonomous technologies, said Robert Kent, lead author of the study and a graduate student in physics at The Ohio State University.

“The problem with most machine learning-based controllers is that they use a lot of energy or power and they take a long time to evaluate,” said Kent. “Developing traditional controllers for them has also been difficult because chaotic systems are extremely sensitive to small changes.”

These issues, he said, are critical in situations where milliseconds can make a difference between life and death, such as when self-driving vehicles must decide to brake to prevent an accident.

The study was published recently in Nature Communications.

Compact enough to fit on an inexpensive computer chip that can balance on your fingertip, and able to run without an internet connection, the team’s digital twin was built to optimize a controller’s efficiency and performance, which the researchers found reduced power consumption. It achieves this quite easily, mainly because it was trained using a machine learning approach called reservoir computing.

“The great thing about the machine learning architecture we used is that it’s very good at learning the behavior of systems that evolve in time,” Kent said. “It’s inspired by how connections spark in the human brain.”
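The release does not spell out the model, so the sketch below is only an illustration of the general idea. It assumes a "next-generation" reservoir computer, a published variant of reservoir computing in which the random recurrent network is replaced by a feature vector of time-delayed inputs and their quadratic products, with a linear readout trained by ridge regression. In classic and next-generation reservoir computing alike, only the output weights are trained, which is what keeps training cheap. The Hénon map stands in for the chaotic circuit; every function name and parameter here is hypothetical, and this is not the study's code.

```python
import numpy as np

def henon_x(n, a=1.4, b=0.3, x=0.1, y=0.1):
    """x-coordinate of the Henon map, used here as a stand-in chaotic signal."""
    out = np.empty(n)
    for i in range(n):
        x, y = 1.0 - a * x * x + y, b * x
        out[i] = x
    return out

def ngrc_features(series, k=2):
    """Per time step: a constant, k delayed values, and their unique quadratic products."""
    rows = []
    for t in range(k - 1, len(series)):
        lin = series[t - k + 1 : t + 1][::-1]  # [x_t, x_{t-1}, ...]
        quad = [lin[i] * lin[j] for i in range(k) for j in range(i, k)]
        rows.append(np.concatenate(([1.0], lin, quad)))
    return np.array(rows)

def fit_readout(phi, y, ridge=1e-8):
    """Ridge (Tikhonov) regression for the linear output weights."""
    a = phi.T @ phi + ridge * np.eye(phi.shape[1])
    return np.linalg.solve(a, phi.T @ y)

k = 2
data = henon_x(2000)
phi = ngrc_features(data[:-1], k)  # features built from x_0 .. x_{N-2}
y = data[k:]                       # the value one step ahead of each feature row

n_train = 1500
w = fit_readout(phi[:n_train], y[:n_train])
pred = phi[n_train:] @ w
rmse = np.sqrt(np.mean((pred - y[n_train:]) ** 2))
print("weights:", np.round(w, 4))
print("one-step test RMSE:", rmse)
```

For the Hénon map the next value is exactly a quadratic function of the last two, so the learned weights recover the map's own coefficients; a real circuit would need more delays, noise handling and a tuned ridge parameter.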

Similarly sized computer chips have been used in devices like smart fridges, but according to the study, this new computing ability makes the model especially well-equipped to handle dynamic systems such as self-driving vehicles and heart monitors, which must be able to quickly adapt to a patient’s heartbeat.

“Big machine learning models have to consume lots of power to crunch data and come out with the right parameters, whereas our model and training is so extremely simple that you could have systems learning on the fly,” he said.
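One standard way to learn "on the fly" with a linear readout is recursive least squares, which folds in each new sample with a small, fixed amount of arithmetic instead of re-solving the whole regression. The release does not say which update rule the team uses; the class below (`OnlineReadout`, a hypothetical name) and the logistic-map data stream are assumptions made for illustration.

```python
import numpy as np

class OnlineReadout:
    """Recursive least squares: updates linear output weights one sample at a time."""

    def __init__(self, n_features, forgetting=1.0, delta=1e6):
        self.w = np.zeros(n_features)        # output weights
        self.P = delta * np.eye(n_features)  # running inverse-correlation estimate
        self.lam = forgetting                # 1.0 = never forget old samples

    def predict(self, phi):
        return self.w @ phi

    def update(self, phi, target):
        p_phi = self.P @ phi
        gain = p_phi / (self.lam + phi @ p_phi)
        error = target - self.w @ phi
        self.w = self.w + gain * error
        self.P = (self.P - np.outer(gain, p_phi)) / self.lam
        return error

# Hypothetical data stream: a chaotic logistic map (r = 3.9). The model
# learns its one-step update while the samples arrive.
model = OnlineReadout(n_features=3)
x = 0.2
for _ in range(1000):
    x_next = 3.9 * x * (1.0 - x)
    model.update(np.array([1.0, x, x * x]), x_next)
    x = x_next

print("learned weights:", np.round(model.w, 3))
```

Each update costs a few small matrix-vector products whose size depends only on the number of features, not on how many samples have been seen, which is the property that makes this kind of training plausible on small, low-power hardware.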

To test this theory, the researchers had their model complete complex control tasks and compared its results with those of previous control techniques. The study found that their approach achieved higher accuracy on the tasks than its linear counterpart and is significantly less computationally complex than a previous machine learning-based controller.

“The increase in accuracy was pretty significant in some cases,” said Kent. The results also showed that their algorithm requires more energy to operate than a linear controller, but in exchange, when it is powered up, the team’s model lasts longer and is considerably more efficient than current machine learning-based controllers on the market.

“People will find good use out of it just based on how efficient it is,” Kent said. “You can implement it on pretty much any platform and it’s very simple to understand.” The algorithm was recently made available to scientists.

Beyond inspiring potential advances in engineering, there’s also an equally important economic and environmental incentive for creating more power-efficient algorithms, said Kent.

As society becomes more dependent on computers and AI for nearly all aspects of daily life, demand for data centers is soaring, leading many experts to worry about digital systems’ enormous power appetite and what future industries will need to do to keep up with it.

And because building these data centers, as well as running large-scale computing experiments, can generate a large carbon footprint, scientists are looking for ways to curb carbon emissions from this technology.

Future work will likely focus on training the model for other applications, such as quantum information processing, Kent said. In the meantime, he expects the approach to reach far into the scientific community.

“Not enough people know about these types of algorithms in the industry and engineering, and one of the big goals of this project is to get more people to learn about them,” said Kent. “This work is a great first step toward reaching that potential.”

This study was supported by the U.S. Air Force’s Office of Scientific Research. Other Ohio State co-authors include Wendson A.S. Barbosa and Daniel J. Gauthier.

#

Contact: Robert Kent, Kent.321@osu.edu

Written by: Tatyana Woodall, Woodall.52@osu.edu

END

University of Leeds news release

Embargoed until 1900 BST, 9 May 2024

How climate change will affect malaria transmission

A new model for predicting the effects of climate change on malaria transmission in Africa could lead to more targeted interventions to control the disease according to a new study.

Previous methods have used rainfall totals to indicate the presence of surface water suitable for breeding mosquitoes, but the research led by the University of Leeds used several climatic and hydrological models to include real-world processes of evaporation, infiltration and flow through rivers.

This ...

Machine learning enables cheaper and safer low-power magnetic resonance imaging (MRI) without sacrificing accuracy, according to a new study. According to the authors, these advances pave the way for affordable, patient-centric, and deep learning-powered ultra-low-field (ULF) MRI scanners, addressing unmet clinical needs in diverse healthcare settings worldwide. Magnetic Resonance Imaging (MRI) has revolutionized healthcare, offering noninvasive and radiation-free imaging. It holds immense promise for advancing medical diagnoses through artificial intelligence. However, despite its five decades of development, MRI remains largely inaccessible, particularly ...

Areas at risk for malaria transmission in Africa may decline more than previously expected because of climate change in the 21st century, suggests an ensemble of environmental and hydrologic models. The combined models predicted that the total area of suitable malaria transmission will start to decline in Africa after 2025 through 2100, including in West Africa and as far east as South Sudan. The new study’s approach captures hydrologic features that are typically missed with standard predictive models of malaria transmission, offering a more nuanced view that could inform malaria control efforts in a warming world. Most of the burden of malaria falls on people living ...

Sea surface temperature anomalies in the Indian Ocean predict the magnitude of global dengue epidemics, according to a new study. The findings suggest that the climate indicator could enhance the forecasting and planning for outbreak responses. Dengue – a mosquito-borne flavivirus disease – affects nearly half the world’s population. Currently, there are no specific drugs or vaccines for the disease, and outbreaks can have serious public health and economic impacts. As a result, the ability to predict the risk of outbreaks and prepare accordingly is crucial for many regions where ...

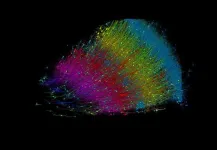

Using more than 1.4 petabytes of electron microscopy (EM) imaging data, researchers have generated a nanoscale-resolution reconstruction of a millimeter-scale fragment of human cerebral cortex, providing an unprecedented view into the structural organization of brain tissue at the supracellular, cellular, and subcellular levels. The human brain is a vastly complex organ and, to date, little is known about its cellular microstructure, including the synaptic and neural circuits it supports. Disruption of these circuits is known to play a role in myriad brain disorders. Yet studying human brain ...

The highest performing countries across public health outcomes share many drivers that contribute to their success. That’s the conclusion of a new study published May 9 in the open-access journal PLOS Global Public Health by Dr. Nadia Akseer, an Epidemiologist-Biostatistician at Johns Hopkins Bloomberg School of Public Health and co-author of the study and colleagues in the Exemplars in Global Health (EGH) program.

In recent years, the EGH program has begun to identify and study positive outliers when it comes to global health programs around the world, with an aim of uncovering not only which health interventions work, ...

As the world is transitioning from a fossil fuel-based energy economy, many are betting on hydrogen to become the dominant energy currency. But producing “green” hydrogen without using fossil fuels is not yet possible on the scale we need because it requires iridium, a metal that is extremely rare. In a study published May 10 in Science, researchers led by Ryuhei Nakamura at the RIKEN Center for Sustainable Resource Science (CSRS) in Japan report a new method that reduces the amount of iridium needed for the reaction by 95%, without altering the rate of hydrogen production. This breakthrough could revolutionize our ability to produce ecologically ...

An international team of researchers has discovered that the quantum particles responsible for the vibrations of materials—which influence their stability and various other properties—can be classified through topology. Phonons, the collective vibrational modes of atoms within a crystal lattice, generate disturbances that propagate like waves through neighboring atoms. These phonons are vital for many properties of solid-state systems, including thermal and electrical conductivity, neutron scattering, and quantum phases like charge density waves and superconductivity.

The spectrum of phonons—essentially ...

A cubic millimeter of brain tissue may not sound like much. But considering that tiny square contains 57,000 cells, 230 millimeters of blood vessels, and 150 million synapses, all amounting to 1,400 terabytes of data, Harvard and Google researchers have just accomplished something enormous.

A Harvard team led by Jeff Lichtman, the Jeremy R. Knowles Professor of Molecular and Cellular Biology and newly appointed dean of science, has co-created with Google researchers the largest synaptic-resolution, 3D reconstruction of a piece of human brain to date, showing in vivid detail each cell and its web of neural connections in a piece of human ...

Superfast levitating trains, long-range lossless power transmission, faster MRI machines — all these fantastical technological advances could be in our grasp if we could just make a material that transmits electricity without resistance — or ‘superconducts’ — at around room temperature.

In a paper published in the May 10 issue of Science, researchers report a breakthrough in our understanding of the origins of superconductivity at relatively high (though still frigid) temperatures. The findings concern a class of superconductors that has puzzled scientists since 1986, called ‘cuprates.’

“There was tremendous excitement when ...