(Press-News.org) Large language models (LLMs) are increasingly automating tasks like translation, text classification and customer service. But tapping into an LLM’s power typically requires users to send their requests to a centralized server — a process that’s expensive, energy-intensive and often slow.

Now, researchers have introduced a technique for compressing an LLM’s reams of data, which could increase privacy, save energy and lower costs.

The new algorithm, developed by engineers at Princeton and Stanford Engineering, works by trimming redundancies and reducing the precision of an LLM’s layers of information. This type of leaner LLM could be stored and accessed locally on a device like a phone or laptop and could provide performance nearly as accurate and nuanced as an uncompressed version.

“Any time you can reduce the computational complexity, storage and bandwidth requirements of using AI models, you can enable AI on devices and systems that otherwise couldn’t handle such compute- and memory-intensive tasks,” said study coauthor Andrea Goldsmith, dean of Princeton’s School of Engineering and Applied Science and Arthur LeGrand Doty Professor of Electrical and Computer Engineering.

“When you use ChatGPT, whatever request you give it goes to the back-end servers of OpenAI, which process all of that data, and that is very expensive,” said coauthor Rajarshi Saha, a Stanford Engineering Ph.D. student. “So, you want to be able to do this LLM inference using consumer GPUs [graphics processing units], and the way to do that is by compressing these LLMs.” Saha’s graduate work is coadvised by Goldsmith and coauthor Mert Pilanci, an assistant professor at Stanford Engineering.

The researchers will present their new algorithm, CALDERA, which stands for Calibration Aware Low precision DEcomposition with low Rank Adaptation, at the Conference on Neural Information Processing Systems (NeurIPS) in December. Saha and colleagues began this compression research not with LLMs themselves, but with the large collections of information that are used to train LLMs and other complex AI models, such as those used for image classification. This technique, a forerunner to the new LLM compression approach, was published in 2023.

Training data sets and AI models are both composed of matrices, or grids of numbers that are used to store data. In the case of LLMs, these are called weight matrices, which are numerical representations of word patterns learned from large swaths of text.

“We proposed a generic algorithm for compressing large data sets or large matrices,” said Saha. “And then we realized that nowadays, it’s not just the data sets that are large, but the models being deployed are also getting large. So, we could also use our algorithm to compress these models.”

While the team’s algorithm is not the first to compress LLMs, its novelty lies in combining two properties, one called “low-precision,” the other “low-rank.” Because digital computers store and process information as bits (zeros and ones), a “low-precision” representation uses fewer bits for each number, which shrinks storage, speeds up processing and improves energy efficiency. “Low-rank,” by contrast, refers to reducing redundancies in the LLM weight matrices.
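As a rough illustration of the two ideas (not the researchers' algorithm), the Python sketch below applies each one separately to a small made-up matrix. The function names, the simple uniform rounding scheme, the matrix size, and the choice of 4 bits and rank 8 are all assumptions chosen for the example.

```python
import numpy as np

def quantize_uniform(w, bits):
    """Low precision: round every entry to one of 2**bits evenly spaced values."""
    steps = 2 ** bits - 1              # number of gaps between the allowed values
    lo, hi = w.min(), w.max()
    if hi == lo:                       # constant matrix, nothing to round
        return w.copy()
    scale = (hi - lo) / steps
    codes = np.round((w - lo) / scale) # integer codes in 0 .. steps
    return codes * scale + lo

def low_rank(w, rank):
    """Low rank: keep only the `rank` strongest directions (truncated SVD)."""
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    return (u[:, :rank] * s[:rank]) @ vt[:rank, :]

# Hypothetical 64 x 64 "weight matrix": redundant structure plus a little noise.
rng = np.random.default_rng(0)
w = rng.normal(size=(64, 8)) @ rng.normal(size=(8, 64))
w = w + 0.05 * rng.normal(size=(64, 64))

def rel_error(approx):
    """Size of the approximation error relative to the size of w."""
    return np.linalg.norm(w - approx) / np.linalg.norm(w)

err_4bit = rel_error(quantize_uniform(w, 4))
err_8bit = rel_error(quantize_uniform(w, 8))
err_rank8 = rel_error(low_rank(w, 8))
print(f"4-bit: {err_4bit:.3f}  8-bit: {err_8bit:.4f}  rank-8: {err_rank8:.3f}")
```

On a matrix built to contain redundancy, like this toy one, the rank-8 version stays close to the original, and giving the low-precision version more bits shrinks its error.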

“Using both of these properties together, we are able to get much more compression than either of these techniques can achieve individually,” said Saha.
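One simple way to picture the combination, sketched here under stated assumptions rather than as the team's CALDERA procedure, is to approximate a weight matrix as a low-precision matrix plus a low-rank correction. The alternating loop, the helper names and the toy settings (2 bits, rank 8) are hypothetical, and the sketch ignores the calibration step that the algorithm's name refers to.

```python
import numpy as np

def quantize_uniform(w, bits):
    """Round every entry to one of 2**bits evenly spaced values."""
    steps = 2 ** bits - 1
    lo, hi = w.min(), w.max()
    if hi == lo:
        return w.copy()
    scale = (hi - lo) / steps
    return np.round((w - lo) / scale) * scale + lo

def low_rank(w, rank):
    """Best rank-`rank` approximation via truncated SVD."""
    u, s, vt = np.linalg.svd(w, full_matrices=False)
    return (u[:, :rank] * s[:rank]) @ vt[:rank, :]

def quantized_plus_low_rank(w, bits, rank, iters=10):
    """Alternate the two steps so that w is approximated by q + lr.

    q  : low-precision matrix (at most 2**bits distinct values)
    lr : low-rank matrix that fits what the rounding missed
    Returns the pair with the smallest error seen across the iterations.
    """
    lr = np.zeros_like(w)
    best = None
    for _ in range(iters):
        q = quantize_uniform(w - lr, bits)
        lr = low_rank(w - q, rank)
        err = np.linalg.norm(w - q - lr)
        if best is None or err < best[0]:
            best = (err, q, lr)
    return best[1], best[2]

# Hypothetical toy weight matrix, not taken from any real model.
rng = np.random.default_rng(0)
w = rng.normal(size=(64, 8)) @ rng.normal(size=(8, 64))
w = w + 0.05 * rng.normal(size=(64, 64))

q, lr = quantized_plus_low_rank(w, bits=2, rank=8)
err_quant_only = np.linalg.norm(w - quantize_uniform(w, 2)) / np.linalg.norm(w)
err_combined = np.linalg.norm(w - q - lr) / np.linalg.norm(w)
print(f"2-bit only: {err_quant_only:.3f}  2-bit + rank-8: {err_combined:.3f}")
```

The low-rank term costs extra storage (roughly rank times the sum of the matrix's two dimensions), and this sketch keeps it in full precision for simplicity.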

The team tested their technique using Llama 2 and Llama 3, open-source large language models released by Meta AI. They found that using low-rank and low-precision components in tandem can improve on methods that use low precision alone. The improvement can be up to 5%, which is significant for metrics that measure uncertainty in predicting word sequences.
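A widely used metric of this kind is perplexity. The release does not name the metric, so treating it as perplexity is an assumption here. The short Python sketch below shows how perplexity is computed from the probabilities a model assigned to the words that actually came next. The probability values are invented for illustration.

```python
import math

def perplexity(token_probs):
    """exp of the average negative log-probability given to each actual next token.

    Lower is better: 1.0 means the model was certain and correct every time.
    """
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)

# Hypothetical probabilities, not measurements from any model.
guessing = perplexity([0.25, 0.25, 0.25, 0.25])   # like picking among 4 options
confident = perplexity([0.9, 0.8, 0.95, 0.85])
print(guessing, confident)
```

A model that spreads its bets evenly over four options scores a perplexity of 4, while one that puts most of its probability on the right word scores closer to 1.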

They evaluated the performance of the compressed language models using several sets of benchmark tasks for LLMs. The tasks included determining the logical order of two statements, or answering questions involving physical reasoning, such as how to separate an egg white from a yolk or how to make a cup of tea.

“I think it’s encouraging and a bit surprising that we were able to get such good performance in this compression scheme,” said Goldsmith, who moved to Princeton from Stanford Engineering in 2020. “By taking advantage of the weight matrix rather than just using a generic compression algorithm for the bits that are representing the weight matrix, we were able to do much better.”

An LLM compressed in this way could be suitable for situations that don’t require the highest possible precision. Moreover, the ability to fine-tune compressed LLMs on edge devices such as smartphones or laptops enhances privacy by allowing organizations and individuals to adapt models to their specific needs without sharing sensitive data with third-party providers. This reduces the risk of data breaches or unauthorized access to confidential information during the training process. To enable this, the LLMs must first be compressed enough to fit on consumer-grade GPUs.
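To give a sense of scale, the back-of-the-envelope Python calculation below estimates how much memory a model's weights need at different precisions. The parameter count of 7 billion and the bit widths are illustrative assumptions, not figures from the study, and the estimate leaves out everything else a running model needs.

```python
def weights_size_gb(num_params, bits_per_weight):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9

# Hypothetical model with 7 billion weights.
num_params = 7e9
for bits in (16, 4, 2):
    print(f"{bits:>2} bits per weight: {weights_size_gb(num_params, bits):.2f} GB")
```

Under these assumptions, cutting each weight from 16 bits to 2 bits takes the weights from 14 GB to 1.75 GB.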

Saha also cautioned that running LLMs on a smartphone or laptop could hog the device’s memory for a period of time. “You won’t be happy if you are running an LLM and your phone drains out of charge in an hour,” said Saha. Low-precision computation can help reduce power consumption, he added. “But I wouldn’t say that there’s one single technique that solves all the problems. What we propose in this paper is one technique that is used in combination with techniques proposed in prior works. And I think this combination will enable us to use LLMs on mobile devices more efficiently and get more accurate results.”

The paper, “Compressing Large Language Models using Low Rank and Low Precision Decomposition,” will be presented at the Conference on Neural Information Processing Systems (NeurIPS) in December 2024. In addition to Goldsmith, Saha and Pilanci, coauthors include Stanford Engineering researchers Naomi Sagan and Varun Srivastava. This work was supported in part by the U.S. National Science Foundation, the U.S. Army Research Office, and the Office of Naval Research.

Leaner large language models could enable efficient local use on phones and laptops

2024-11-18

ELSE PRESS RELEASES FROM THIS DATE:

‘Map of Life’ team wins $2 million prize for innovative rainforest tracking

2024-11-18

Traditionally, taking inventory of the species in a rainforest requires sending in a team of experts with field guides and binoculars for a multi-day expedition. But the devastating pace of the destruction of the world’s rainforests and increasing urgency to better monitor and protect what remains demand faster, easier, and more efficient approaches.

Several years ago, a Yale-based team devised an alternate approach: they use lightweight, unmanned aerial vehicles (UAVs) to collect this critical biodiversity data in remote areas.

Now they’ve collected ...

Rise in pancreatic cancer cases among young adults may be overdiagnosis

2024-11-18

Embargoed for release until 5:00 p.m. ET on Monday 18 November 2024

@Annalsofim

Below please find summaries of new articles that will be published in the next issue of Annals of Internal Medicine. The summaries are not intended to substitute for the full articles as a source of information. This information is under strict embargo and by taking it into possession, media representatives are committing to the terms of the embargo not only on their own behalf, but also on behalf of the organization they represent. ...

New study: Short-lived soda tax reinforces alternative presumptions on tax impacts on consumer behaviors

2024-11-18

Key Takeaway:

When policymakers enact consumption taxes to raise revenue for the government, consumers who oppose the tax may decrease their consumption more, leading to a reduction in tax revenue.

BALTIMORE, MD, November 18, 2024 – One of the most common assumptions tax policymakers make is that by raising taxes, they will raise revenue for the government. However, a new study that centers on a soda tax in Washington state has reinforced alternative presumptions about tax impacts on consumer behaviors.

Researchers found that when Washington state enacted a tax on soda, it not only generated backlash in the consumer marketplace and political ...

Fewer than 1 in 5 know the 988 suicide lifeline

2024-11-18

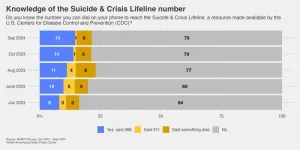

PHILADELPHIA – Annenberg Public Policy Center survey data show that public recall of the 988 Suicide & Crisis Lifeline number has grown slowly since the three-digit phone number was introduced in July 2022. Just 15% of U.S. adults are familiar with it, as of September 2024.

Survey respondents who accurately report awareness of the Suicide & Crisis Lifeline number increased significantly from August 2023 (11%) to September 2024 (15%). Those 15% of respondents reported both that they knew the number and, when asked in an open-ended format, said the number ...

Semaglutide eligibility across all current indications for US adults

2024-11-18

About The Study: A total of nearly 137 million adults, representing more than half of all U.S. adults, are eligible for semaglutide therapy. This exceeds the number of adults eligible for statins (approximately 82 million), currently the most prescribed pharmaceuticals among U.S. adults.

Corresponding Author: To contact the corresponding author, Dhruv S. Kazi, MD, MS, email dkazi@bidmc.harvard.edu.

To access the embargoed study: Visit our For The Media website at this link https://media.jamanetwork.com/

(doi:10.1001/jamacardio.2024.4657)

Editor’s Note: Please see the article for additional information, including other authors, author contributions ...

Can podcasts create healthier habits?

2024-11-18

Whether it’s ABC Listen’s Health Report or Mamamia’s But Are You Happy, podcasts have fast become a part of our everyday media consumption. In fact, the average person spends more than five hours a week listening to them. But could listening to podcasts lead to healthier habits?

In the first study of its kind, University of South Australia researchers have explored just this, finding that podcasts can significantly improve health knowledge, increase exercise levels, and boost healthy eating.

Reviewing ...

Zerlasiran—A small-interfering RNA targeting lipoprotein(a)

2024-11-18

About The Study: Zerlasiran, a small-interfering RNA targeting hepatic synthesis of apolipoprotein(a), was well-tolerated and reduced time-averaged lipoprotein(a) concentration by more than 80% during 36 weeks of treatment in patients with atherosclerotic cardiovascular disease.

Corresponding Author: To contact the corresponding author, Steven E. Nissen, MD, email nissens@ccf.org.

To access the embargoed study: Visit our For The Media website at this link https://media.jamanetwork.com/

(doi:10.1001/jama.2024.21957)

Editor’s ...

Anti-obesity drugs, lifestyle interventions show cardiovascular benefits beyond weight loss

2024-11-18

Popular anti-obesity drugs continue to show cardiovascular benefits beyond weight loss, according to several new papers published in JACC, the flagship journal of the American College of Cardiology, that are being simultaneously presented at the American Heart Association’s 2024 Scientific Sessions. JACC is publishing two secondary analyses on the impact of GLP-1 medications in improving cardiac structure and function in heart failure patients and cardiovascular outcomes in those who previously had cardiac bypass surgery, ...

Oral muvalaplin for lowering of lipoprotein(a)

2024-11-18

About The Study: Muvalaplin, an oral small molecule lipoprotein(a) inhibitor, reduced lipoprotein(a) measured using intact lipoprotein(a) and apolipoprotein(a)-based assays and was well tolerated. The effect of muvalaplin on cardiovascular events requires further investigation.

Corresponding Author: To contact the corresponding author, Stephen J. Nicholls, MBBS, PhD, email stephen.nicholls@monash.edu.

To access the embargoed study: Visit our For The Media website at this link https://media.jamanetwork.com/

(doi:10.1001/jama.2024.24017)

Editor’s Note: Please see the article for additional information, including ...

Revealing the hidden costs of what we eat

2024-11-18

(Santa Barbara, Calif.) — Shifting our diets to be more sustainable can be a powerful way for each of us to address both climate change and global food insecurity, however making such adjustments at the large scales necessary to make a difference globally can be a delicate matter.

“Changes in food demand in one part of the world can have cascading environmental and human welfare implications for people around the world),” said Joe DeCesaro, data analyst at UC Santa Barbara’s National Center for Ecological Analysis & Synthesis (NCEAS).

Despite the seemingly daunting complexity of the global food system, to ensure a healthy population ...