Accurate object pose estimation refers to the ability of a robot to determine both the position and orientation of an object. It is essential for robotics, especially in pick-and-place tasks, which are crucial in industries such as manufacturing and logistics. As robots are increasingly tasked with complex operations, their ability to precisely determine the six-degrees-of-freedom (6D) pose of objects, that is, their position and orientation, becomes critical. This ability allows robots to interact with objects reliably and safely. However, despite advancements in deep learning, the performance of 6D pose estimation algorithms largely depends on the quality of the data they are trained on.

A new study led by Associate Professor Phan Xuan Tan from the College of Engineering, Shibaura Institute of Technology, Japan, together with Dr. Van-Truong Nguyen, Mr. Cong-Duy Do, and Dr. Thanh-Lam Bui from Hanoi University of Industry, Vietnam, and Associate Professor Thai-Viet Dang from Hanoi University of Science and Technology, Vietnam, introduces a carefully designed dataset aimed at improving the performance of 6D pose estimation algorithms. The dataset addresses a major gap in robotic grasping and automation research by providing a comprehensive resource that allows robots to perform tasks with higher precision and adaptability in real-world environments. The study was made available online on November 23, 2024, and published in Volume 24 of the journal Results in Engineering in December 2024.

Assoc. Prof. Tan explains, “Our goal was to create a dataset that not only advances research but also addresses practical challenges in industrial robotic automation. We hope it serves as a valuable resource for researchers and engineers alike.”

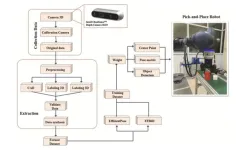

The research team created a dataset that not only meets the demands of the research community but is also applicable in practical industrial settings. Using the Intel RealSense™ Depth Camera D435, they captured high-quality RGB and depth images and annotated each with 6D pose data, namely the rotation and translation of the objects. The dataset features objects of various shapes and sizes, and data augmentation techniques were applied to make it robust across diverse environmental conditions. This approach makes the dataset applicable to a wide range of robotic applications.
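To make the idea of a 6D pose annotation concrete, the following minimal Python sketch shows how a rotation and a translation together map a point on an object into the camera's coordinate frame. The function names, the example values, and the choice of a 3×3 rotation matrix are illustrative assumptions; the article does not describe the dataset's actual annotation format.

```python
import math


def rotation_z(angle_rad):
    """Return a 3x3 rotation matrix for a rotation about the z-axis."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0],
            [s, c, 0.0],
            [0.0, 0.0, 1.0]]


def apply_pose(rotation, translation, point):
    """Map a point from object coordinates to camera coordinates: R * p + t."""
    return [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]


# Hypothetical annotation (values invented for illustration, in meters):
# the object is rotated 90 degrees about z and sits 0.5 m in front of the camera.
R = rotation_z(math.pi / 2)
t = [0.10, 0.00, 0.50]

# A point 2 cm along the object's own x-axis.
corner = [0.02, 0.00, 0.00]
corner_in_camera = apply_pose(R, t, corner)
print(corner_in_camera)
```

The three rotational and three translational degrees of freedom are what the "6D" in 6D pose refers to; a pose estimator has to recover both from the RGB and depth images.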

“Our dataset was carefully designed to be practical for industries. By including objects with varying shapes and environmental variables, it provides a valuable resource not only for researchers but also for engineers working in fields where robots operate in dynamic and complex conditions,” adds Assoc. Prof. Tan.

The dataset was evaluated using two state-of-the-art deep learning models, EfficientPose and FFB6D, which achieved accuracy rates of 97.05% and 98.09%, respectively. These high accuracy rates indicate that the dataset provides reliable and precise pose information, which is crucial for applications such as robotic manipulation, quality control in manufacturing, and autonomous vehicles. The strong performance of these algorithms on the dataset underscores its potential for improving robotic systems that require precision.
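The article does not say how these accuracy rates were computed. One metric widely used in 6D pose estimation is ADD (average distance of model points), under which a predicted pose is typically counted as correct when the average distance falls below 10% of the object's diameter. The sketch below illustrates that metric under this assumption; the function names, the model points, and the poses are all hypothetical and are not taken from the study.

```python
import math


def apply_pose(rotation, translation, point):
    """Map a point from object coordinates to camera coordinates: R * p + t."""
    return [
        sum(rotation[i][j] * point[j] for j in range(3)) + translation[i]
        for i in range(3)
    ]


def add_error(model_points, pose_gt, pose_pred):
    """Average distance between model points under the true and predicted poses."""
    total = 0.0
    for p in model_points:
        total += math.dist(apply_pose(*pose_gt, p), apply_pose(*pose_pred, p))
    return total / len(model_points)


def is_correct(model_points, pose_gt, pose_pred, diameter, fraction=0.1):
    """Assumed acceptance rule: ADD error below a fraction of the object diameter."""
    return add_error(model_points, pose_gt, pose_pred) < fraction * diameter


# Hypothetical example values, in meters.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
points = [[x, y, z] for x in (0.0, 0.05) for y in (0.0, 0.05) for z in (0.0, 0.05)]
pose_gt = (identity, [0.0, 0.0, 0.5])
pose_pred = (identity, [0.003, 0.0, 0.5])  # prediction is off by 3 mm along x

error = add_error(points, pose_gt, pose_pred)
accepted = is_correct(points, pose_gt, pose_pred, diameter=0.1)
print(error, accepted)
```

Under this convention, an accuracy rate is the share of test images whose predicted pose passes the threshold, so the reported figures may well mean something different if the authors used another metric.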

Assoc. Prof. Tan states, “While our dataset includes a range of basic shapes like rectangular prisms, trapezoids, and cylinders, expanding it to include more complex and irregular objects would make it more applicable for real-world scenarios.” Further, he adds, “While the Intel RealSense™ Depth D435 camera offers excellent depth and RGB data, the reliance of the dataset on it may limit its accessibility for researchers who do not have access to the same equipment.”

Despite these limitations, the researchers are optimistic about the impact of the dataset. The results indicate that a well-designed dataset can significantly improve the performance of 6D pose estimation algorithms, allowing robots to perform more complex tasks with higher precision and efficiency.

“The results are worth the effort!” exclaims Assoc. Prof. Tan. Looking ahead, the team plans to expand the dataset by incorporating a broader variety of objects and to automate parts of the data collection process to make it more efficient and accessible. These efforts aim to further enhance the applicability and utility of the dataset, benefiting both researchers and industries that rely on robotic automation.

***

Reference

DOI: https://doi.org/10.1016/j.rineng.2024.103459

About Shibaura Institute of Technology (SIT), Japan

Shibaura Institute of Technology (SIT) is a private university with campuses in Tokyo and Saitama. Since the establishment of its predecessor, Tokyo Higher School of Industry and Commerce, in 1927, it has maintained “learning through practice” as its philosophy in the education of engineers. SIT was the only private science and engineering university selected for the Top Global University Project sponsored by the Ministry of Education, Culture, Sports, Science and Technology, and it received support from the ministry for 10 years starting from the 2014 academic year. Its motto, “Nurturing engineers who learn from society and contribute to society,” reflects its mission of fostering scientists and engineers who can contribute to the sustainable growth of the world by exposing its more than 9,500 students to culturally diverse environments, where they learn to cope, collaborate, and relate with fellow students from around the world.

Website: https://www.shibaura-it.ac.jp/en/

About Associate Professor Phan Xuan Tan from SIT, Japan

Dr. Phan Xuan Tan is an Associate Professor at the College of Engineering, Shibaura Institute of Technology (SIT), Japan. He holds a B.E. in Electrical-Electronic Engineering, an M.S. in Computer and Communication Engineering, and a Ph.D. in Functional Control Systems. Notably, he served as Vice Director of the Innovative Global Program at SIT and is a recognized expert in computer vision, deep learning, image processing, and robotics. His work has significantly contributed to improving industrial robotics systems.

Funding Information

This research was funded by Vingroup Innovation Foundation (VINIF) under project code VINIF.2023.DA089.
