UNDER EMBARGO UNTIL 19:00 BST / 14:00 ET MONDAY 20 MAY 2024.

World leaders still need to wake up to AI risks, say leading experts ahead of AI Safety Summit

More information, including a copy of the paper, can be found online at the Science press package at https://www.eurekalert.org/press/scipak/, or can be requested from scipak@aaas.org

Leading AI scientists are calling for stronger action on AI risks from world leaders, warning that progress has been insufficient since the first AI Safety Summit in Bletchley Park six months ago.

Then, the world’s leaders pledged to govern AI responsibly. However, as the second AI Safety Summit in Seoul (21-22 May) approaches, twenty-five of the world's leading AI scientists say not enough is actually being done to protect us from the technology’s risks. In an expert consensus paper published today in Science, they outline urgent policy priorities that global leaders should adopt to counteract the threats from AI technologies.

Professor Philip Torr, Department of Engineering Science, University of Oxford, a co-author on the paper, says: “The world agreed during the last AI summit that we needed action, but now it is time to go from vague proposals to concrete commitments. This paper provides many important recommendations for what companies and governments should commit to do.”

World’s response not on track in face of potentially rapid AI progress

According to the paper’s authors, it is imperative that world leaders take seriously the possibility that highly powerful generalist AI systems, outperforming human abilities across many critical domains, will be developed within the current decade or the next. They say that although governments worldwide have been discussing frontier AI and have made some attempts to introduce initial guidelines, this is simply incommensurate with the possibility of rapid, transformative progress expected by many experts.

Current research into AI safety is seriously lacking, with only an estimated 1-3% of AI publications concerning safety. Additionally, we have neither the mechanisms nor the institutions in place to prevent misuse and recklessness, including regarding the use of autonomous systems capable of independently taking actions and pursuing goals.

World-leading AI experts issue call to action

In light of this, an international community of AI pioneers has issued an urgent call to action. The co-authors include Geoffrey Hinton, Andrew Yao, Dawn Song, and the late Daniel Kahneman: in total, 25 of the world’s leading academic experts in AI and its governance. The authors hail from the US, China, EU, UK, and other AI powers, and include Turing award winners, Nobel laureates, and authors of standard AI textbooks.

This article marks the first time that such a large and international group of experts has agreed on priorities for global policy makers regarding the risks from advanced AI systems.

Urgent priorities for AI governance

The authors recommend that governments:

establish fast-acting, expert institutions for AI oversight and provide these with far greater funding than they are due to receive under almost any current policy plan. As a comparison, the US AI Safety Institute currently has an annual budget of $10 million, while the US Food and Drug Administration (FDA) has a budget of $6.7 billion.

mandate much more rigorous risk assessments with enforceable consequences, rather than relying on voluntary or underspecified model evaluations.

require AI companies to prioritise safety, and to demonstrate their systems cannot cause harm. This includes using “safety cases” (used for other safety-critical technologies such as aviation), which shift the burden of demonstrating safety onto AI developers.

implement mitigation standards commensurate with the risk levels posed by AI systems. An urgent priority is to put in place policies that automatically trigger when AI hits certain capability milestones. If AI advances rapidly, strict requirements automatically take effect, but if progress slows, the requirements relax accordingly.

According to the authors, for exceptionally capable future AI systems, governments must be prepared to take the lead in regulation. This includes licensing the development of these systems, restricting their autonomy in key societal roles, halting their development and deployment in response to worrying capabilities, mandating access controls, and requiring information security measures robust to state-level hackers, until adequate protections are ready.

AI impacts could be catastrophic

AI is already making rapid progress in critical domains such as hacking, social manipulation, and strategic planning, and may soon pose unprecedented control challenges. To advance undesirable goals, AI systems could gain human trust, acquire resources, and influence key decision-makers. To avoid human intervention, they could be capable of copying their algorithms across global server networks. Large-scale cybercrime, social manipulation, and other harms could escalate rapidly. In open conflict, AI systems could autonomously deploy a variety of weapons, including biological ones. Consequently, there is a very real chance that unchecked AI advancement could culminate in a large-scale loss of life and the biosphere, and the marginalization or extinction of humanity.

Stuart Russell OBE, Professor of Computer Science at the University of California at Berkeley and an author of the world’s standard textbook on AI, says: “This is a consensus paper by leading experts, and it calls for strict regulation by governments, not voluntary codes of conduct written by industry. It’s time to get serious about advanced AI systems. These are not toys. Increasing their capabilities before we understand how to make them safe is utterly reckless. Companies will complain that it’s too hard to satisfy regulations—that "regulation stifles innovation." That’s ridiculous. There are more regulations on sandwich shops than there are on AI companies.”

Notes for editors:

The paper ‘Managing extreme AI risks amid rapid progress’ will be published in Science at 19:00 BST / 14:00 ET on Monday 20 May 2024, doi: 10.1126/science.adn0117. Link: http://www.science.org/doi/10.1126/science.adn0117

To view a copy of the paper before then, contact scipak@aaas.org or see the Science press package at https://www.eurekalert.org/press/scipak/.

The following sections list interview availability, notable co-authors, and additional quotes from the authors.

Interviews

For coordination purposes, you can contact study co-authors Jan Brauner and Sören Mindermann. We are also available for interviews, but you may prefer to interview our more senior co-authors (see below).

Jan Brauner, University of Oxford

jan.m.brauner@gmail.com

+49 177 9106783

Sören Mindermann, University of Oxford, Mila - Quebec AI Institute, Université de Montréal

soeren.mindermann@gmail.com

The following senior co-authors have agreed to be available for interviews:

Stuart Russell: Professor in AI at UC Berkeley, author of the standard textbook on AI

russell@berkeley.edu

Gillian Hadfield: CIFAR AI Chair and Director of the Schwartz Reisman Institute for Technology and Society at the University of Toronto

g.hadfield@utoronto.ca

Please also include her communications manager: marco.silva@utoronto.ca

Jeff Clune: CIFAR AI Chair, Professor in AI at University of British Columbia, one of the leading researchers in reinforcement learning

jeff.clune@ubc.ca

Tegan Maharaj: Assistant Professor in AI at the University of Toronto

tegan.maharaj@utoronto.ca

Notable co-authors:

The world’s most-cited computer scientist (Prof. Hinton), and the most-cited scholar in AI security and privacy (Prof. Dawn Song)

China’s first Turing Award winner (Andrew Yao).

The authors of the standard textbooks on artificial intelligence (Prof. Stuart Russell) and machine learning theory (Prof. Shai Shalev-Shwartz)

One of the world’s most influential public intellectuals (Prof. Yuval Noah Harari)

A Nobel Laureate in economics, the world’s most-cited economist (Prof. Daniel Kahneman)

Department-leading AI legal scholars and social scientists (Lan Xue, Qiqi Gao, and Gillian Hadfield).

Some of the world’s most renowned AI researchers from subfields such as reinforcement learning (Pieter Abbeel, Jeff Clune, Anca Dragan), AI security and privacy (Dawn Song), AI vision (Trevor Darrell, Phil Torr, Ya-Qin Zhang), automated machine learning (Frank Hutter), and several researchers in AI safety.

Additional quotes from the authors:

Philip Torr, Professor in AI, University of Oxford:

"I believe if we tread carefully the benefits of AI will outweigh the downsides, but for me one of the biggest immediate risks from AI is that we develop the ability to rapidly process data and control society, by government and industry. We could risk slipping into some Orwellian future with some form of totalitarian state having complete control."

Dawn Song, Professor in AI at UC Berkeley, most-cited researcher in AI security and privacy:

“Explosive AI advancement is the biggest opportunity and at the same time the biggest risk for mankind. It is important to unite and reorient towards advancing AI responsibly, with dedicated resources and priority to ensure that the development of AI safety and risk mitigation capabilities can keep up with the pace of the development of AI capabilities and avoid any catastrophe.”

Yuval Noah Harari, Professor of History at the Hebrew University of Jerusalem, best-selling author of ‘Sapiens’ and ‘Homo Deus’, world-leading public intellectual:

"In developing AI, humanity is creating something more powerful than itself, that may escape our control and endanger the survival of our species. Instead of uniting against this shared threat, we humans are fighting among ourselves. Humankind seems hell-bent on self-destruction. We pride ourselves on being the smartest animals on the planet. It seems then that evolution is switching from survival of the fittest, to extinction of the smartest."

Jeff Clune, Professor in AI at University of British Columbia and one of the leading researchers in reinforcement learning:

“Technologies like spaceflight, nuclear weapons and the Internet moved from science fiction to reality in a matter of years. AI is no different. We have to prepare now for risks that may seem like science fiction – like AI systems hacking into essential networks and infrastructure, AI political manipulation at scale, AI robot soldiers and fully autonomous killer drones, and even AIs attempting to outsmart us and evade our efforts to turn them off.”

“The risks we describe are not necessarily long-term risks. AI is progressing extremely rapidly. Even just with current trends, it is difficult to predict how capable it will be in 2-3 years. But what very few realize is that AI is already dramatically speeding up AI development. What happens if there is a breakthrough for how to create a rapidly self-improving AI system? We are now in an era where that could happen any month. Moreover, the odds of that being possible go up each month as AI improves and as the resources we invest in improving AI continue to exponentially increase.”

Gillian Hadfield, CIFAR AI Chair and Director of the Schwartz Reisman Institute for Technology and Society at the University of Toronto:

“AI labs need to walk the walk when it comes to safety. But they’re spending far less on safety than they spend on creating more capable AI systems. Spending one-third on ensuring safety and ethical use should be the minimum.”

“This technology is powerful, and we’ve seen it is becoming more powerful, fast. What is powerful is dangerous, unless it is controlled. That is why we call on major tech companies and public funders to allocate at least one-third of their AI R&D budget to safety and ethical use, comparable to their funding for AI capabilities.”

Sheila McIlraith, Professor in AI, University of Toronto, Vector Institute:

“AI is software. Its reach is global and its governance needs to be as well.”

“Just as we've done with nuclear power, aviation, and with biological and nuclear weaponry, countries must establish agreements that restrict development and use of AI, and that enforce information sharing to monitor compliance. Countries must unite for the greater good of humanity.”

“Now is the time to act, before AI is integrated into our critical infrastructure. We need to protect and preserve the institutions that serve as the foundation of modern society.”

Frank Hutter, Professor in AI at the University of Freiburg, Head of the ELLIS Unit Freiburg, 3x ERC grantee:

“To be clear: we need more research on AI, not less. But we need to focus our efforts on making this technology safe. For industry, the right type of regulation will provide economic incentives to shift resources from making the most capable systems yet more powerful to making them safer. For academia, we need more public funding for trustworthy AI and to maintain a low barrier to entry for research on less capable open-source AI systems. This is the most important research challenge of our time, and the right mechanism design will focus the community at large to work towards the right breakthroughs.”

About the University of Oxford

Oxford University has been placed number 1 in the Times Higher Education World University Rankings for the eighth year running, and number 3 in the QS World Rankings 2024. At the heart of this success are the twin pillars of our ground-breaking research and innovation and our distinctive educational offer.

Oxford is world-famous for research and teaching excellence and home to some of the most talented people from across the globe. Our work helps the lives of millions, solving real-world problems through a huge network of partnerships and collaborations. The breadth and interdisciplinary nature of our research alongside our personalised approach to teaching sparks imaginative and inventive insights and solutions.

Through its research commercialisation arm, Oxford University Innovation, Oxford is the highest university patent filer in the UK and is ranked first in the UK for university spinouts, having created more than 300 new companies since 1988. Over a third of these companies have been created in the past five years. The university is a catalyst for prosperity in Oxfordshire and the United Kingdom, contributing £15.7 billion to the UK economy in 2018/19, and supports more than 28,000 full-time jobs.

END

World leaders still need to wake up to AI risks, say leading experts ahead of AI Safety Summit

2024-05-20

ELSE PRESS RELEASES FROM THIS DATE:

*FREE* Managing extreme AI risks amidst rapid technological development

2024-05-20

Although researchers have warned of the extreme risks posed by rapidly developing artificial intelligence (AI) technologies, there is a lack of consensus about how to manage them. In a Policy Forum, Yoshua Bengio and colleagues examine the risks of advancing AI technologies – from the social and economic impacts, malicious uses, and the possible loss of human control over autonomous AI systems – and recommend directions for proactive and adaptive governance to mitigate them. They call on major technology companies and public funders to invest more – at least one-third of their budgets – into assessing and ...

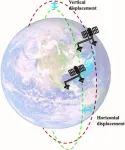

Advancing 3D mapping with tandem dual-antenna SAR interferometry

2024-05-20

The new Tandem Dual-Antenna Spaceborne Synthetic Aperture Radar (SAR) Interferometry (TDA-InSAR) system, addresses the limitations of current spaceborne Synthetic Aperture Radar (SAR) systems by providing a more reliable and efficient method for 3D surface mapping. The system's innovative design allows for single-pass acquisitions, significantly reducing the time required for data collection and enhancing the precision of 3D reconstructions in various terrains, including built-up areas and vegetation canopies.

Synthetic Aperture Radar (SAR) interferometry (InSAR) is a powerful tool for producing high-resolution topographic maps. However, traditional InSAR techniques face ...

Mount Sinai launches Center for Healthcare Readiness to strengthen practice and partnerships in public health emergency response

2024-05-20

The Icahn School of Medicine at Mount Sinai announced the launch of its new Center for Healthcare Readiness, bringing together a diverse team of academic and operational experts to strengthen the Mount Sinai Health System’s strategies and the U.S. health care sector’s capacity to prepare for and respond to any large-scale public health emergency.

The Center will work with both Mount Sinai’s own resources, and public and private partners at the local, regional, and federal levels, to pursue strategies in research, advocacy, innovation, and collaboration to plan ...

Study sheds light on bacteria associated with pre-term birth

2024-05-20

Researchers from North Carolina State University have found that multiple species of Gardnerella, bacteria sometimes associated with bacterial vaginosis (BV) and pre-term birth, can coexist in the same vaginal microbiome. The findings add to the emerging picture of Gardnerella’s effects on human health.

Gardnerella is a group of anaerobic bacteria that are commonly found in the vaginal microbiome. Higher levels of the bacteria are a signature of BV and associated with higher risk of pre-term birth, ...

Evolving market dynamics foster consumer inattention that can lead to risky purchases

2024-05-20

CORVALLIS, Ore. – Researchers have developed a new theory of how changing market conditions can lead large numbers of otherwise cautious consumers to buy risky products such as subprime mortgages, cryptocurrency or even cosmetic surgery procedures.

These changes can occur in categories of products that are generally low risk when they enter the market. As demand increases, more companies may enter the market and try to attract consumers with lower priced versions of the product that carry more risk. If the negative effects of that risk are not immediately noticeable, the market can evolve to keep consumers ignorant of the risks, said Michelle Barnhart, an associate professor ...

Ex-cigarette smokers who vape may be at higher risk for lung cancer

2024-05-20

EMBARGOED UNTIL: 9:15 a.m. PT, May 20, 2024

Session: B20 – Lung Screening: One Size Does Not Fit All

Association of Electronic Cigarette Use After Conventional Smoking Cessation with Lung Cancer Risk: A Nationwide Cohort Study

Date and Time: Monday, May 20, 2024, 9:15 a.m. PT

Location: San Diego Convention Center, Room 30A-B (Upper Level)

ATS 2024, San Diego – Former cigarette smokers who use e-cigarettes or vaping devices may be at higher risk for lung cancer than those who don’t ...

The impacts of climate change on food production

2024-05-20

A new peer-reviewed study from researchers at The University of Texas at Arlington; the University of Nevada, Reno; and Virginia Tech shows that climate change has led to decreased pollen production from plants and less pollen more diversity than previously thought, which could have a significant impact on food production.

“This research is crucial as it examines the long-term impacts of climate change on plant-pollinator interactions,” said Behnaz Balmaki, lead author of the study and an assistant professor of research in biology at UTA. “This study investigates how shifts in flowering times and extreme weather events affect the availability of critical food ...

Mothers live longer as child mortality declines

2024-05-20

ITHACA, N.Y. – The dramatic decline in childhood mortality during the 20th century has added a full year to women’s lives, according to a new study.

“The picture I was building in my mind was to think about what the population of mothers in the U.S. looked like in 1900,” said Matthew Zipple, a Klarman Postdoctoral Fellow in neurobiology and behavior at Cornell University and author of “Reducing Childhood Mortality Extends Mothers’ Lives,” which published May 9 in Scientific Reports.

“It was a population made up of two approximately equal-sized ...

Study reveals promising development in cancer-fighting nanotechnologies

2024-05-20

A new study conducted by the Wilhelm Lab at the University of Oklahoma examines a promising development in biomedical nanoengineering. Published in Advanced Materials, the study explores new findings on the transportation of cancer nanomedicines into solid tumors.

A frequent misconception about many malignant solid tumors is that they are comprised only of cancerous cells. However, solid tumors also include healthy cells, such as immune cells and blood vessels. These blood vessels are nutrient transportation ...

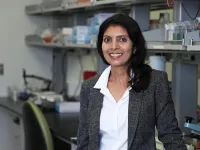

Fat cells influence heart health in Chagas disease

2024-05-20

Jyothi Nagajyothi, Ph.D. and her laboratory at the Hackensack Meridian Center for Discovery and Innovation (CDI) have identified what may be the main mechanism for how chronic Chagas Disease, a parasitic infection affecting millions of people worldwide, can cause irreversible and potentially fatal heart damage.

The culprit is in the adipose (fat tissue) which the parasite Trypanosoma cruzi destroys in the course of infection, releasing smaller particles which induce the dysfunction of heart tissue, conclude the scientists in the journal iScience, a Cell Press open-access journal.

“We are attempting to understand this ...